Leveraging AI for Efficient Infrastructure Management Solutions

Outline: 1) Introduction: Why AI-Driven Infrastructure Matters; 2) Automation in Infrastructure Management: Principles and Use Cases; 3) Predictive Analytics: Turning Data Into Foresight; 4) Smart Infrastructure: Architectures, Sensors, and Edge Intelligence; 5) Conclusion and Phased ROI Roadmap for Operations Leaders.

Introduction: Why AI-Driven Infrastructure Matters

Infrastructure is the quiet stage on which modern life performs—power lines, water mains, roads, and plants that must run steadily despite aging assets, climate volatility, tight budgets, and rising demand. Artificial intelligence offers practical levers to meet that complexity without asking for miracles: automating routine work, anticipating failures, and orchestrating responses across teams and systems. This isn’t a story about gadgets; it’s about reliability, safety, and resilience. Industry studies frequently report that predictive maintenance can reduce unplanned downtime by double‑digit percentages and lower maintenance costs while extending asset life. For frontline managers, those gains translate into fewer emergency callouts, more predictable schedules, and safer working conditions.

What makes AI particularly relevant now is the abundance of machine data (from vibration and temperature to power quality and flow rates), combined with falling sensor costs and mature modeling techniques. Even modest models trained on well‑labeled data can outperform rule‑based thresholds, catching subtle drifts long before alarms ring. As organizations move from reactive to proactive operations, a new pattern appears: daily decisions are shaped by forecasts, not surprises. A transit tunnel schedules ventilation fan overhauls during low‑traffic windows; a water network reduces leakage by comparing predicted nighttime demand with metered flow and investigating persistent gaps; a microgrid smooths demand peaks to avoid penalties.

The value proposition remains grounded and measurable. Leaders can align AI with existing KPIs, such as mean time between failures (MTBF), mean time to repair (MTTR), service level attainment, and energy intensity per output, rather than inventing new ones. A simple framing helps when communicating across technical and non‑technical teams:

– Reduce unplanned downtime and safety incidents
– Increase throughput and service reliability
– Optimize maintenance spend and spare parts inventory
– Lower energy use and emissions intensity

When these outcomes are made explicit, the conversation shifts from “Why AI?” to “Where first?”, an essential mindset for practical adoption.
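The KPIs above are simple ratios, but teams often compute them differently. A minimal Python sketch, assuming MTBF is operating time (observation period minus downtime) divided by the number of failures, and MTTR is total downtime divided by the same count. `Outage` and `mtbf_mttr` are hypothetical names, and the outage log is made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Outage:
    """One failure of a single asset; times are hours from the start of the period."""
    failed_at: float
    restored_at: float


def mtbf_mttr(outages: List[Outage], period_hours: float) -> Tuple[float, float]:
    """Return (MTBF, MTTR) in hours for one asset over one observation period.

    MTBF = operating time / number of failures
    MTTR = repair (down) time / number of failures
    """
    if not outages:
        raise ValueError("no failures recorded; MTBF is undefined for this period")
    downtime = sum(o.restored_at - o.failed_at for o in outages)
    uptime = period_hours - downtime
    n = len(outages)
    return uptime / n, downtime / n


# A 30-day month (720 h) with three outages lasting 4 h, 2 h, and 6 h.
log = [Outage(100, 104), Outage(400, 402), Outage(650, 656)]
mtbf, mttr = mtbf_mttr(log, period_hours=720)
print(f"MTBF = {mtbf:.0f} h, MTTR = {mttr:.0f} h")  # MTBF = 236 h, MTTR = 4 h
```

Whatever convention is chosen, it should stay fixed across the before‑and‑after comparison, or apparent gains may just reflect a change in definition.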

Automation in Infrastructure Management: Principles and Use Cases

Automation in infrastructure spans a spectrum: from task automation that eliminates repetitive steps, to process orchestration that synchronizes workflows across departments, and finally to autonomy, where systems act within guardrails. Each layer brings value, but the layers should be sequenced to fit operational maturity. A common starting point is digitizing routine inspections and work orders so that condition data flows automatically from the field to maintenance planning. Once basic data hygiene is in place, event‑driven automation links monitoring to response, e.g., generating a prioritized ticket when a transformer’s thermal profile departs from its learned baseline.
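One way to implement a “learned baseline” check is a rolling mean and standard deviation with a z‑score trigger. The sketch below assumes that approach; `ThermalBaseline`, the 48‑reading window, and the threshold of 3 are illustrative choices rather than a prescribed method, and a production system might use a model that also accounts for load and ambient temperature.

```python
import statistics
from collections import deque
from typing import Optional


class ThermalBaseline:
    """Rolling baseline for one transformer's temperature readings (hypothetical example)."""

    def __init__(self, asset_id: str, window: int = 48, z_threshold: float = 3.0):
        self.asset_id = asset_id
        self.readings = deque(maxlen=window)  # keeps only the most recent readings
        self.z_threshold = z_threshold

    def check(self, temp_c: float) -> Optional[dict]:
        """Return a ticket if temp_c departs from the baseline, else None.

        Each reading is scored against the baseline built from earlier
        readings, then appended to it.
        """
        ticket = None
        history = list(self.readings)
        if len(history) >= 2:  # stdev needs at least two points
            mu = statistics.mean(history)
            sigma = statistics.stdev(history)
            if sigma > 0:
                z = (temp_c - mu) / sigma
                if abs(z) >= self.z_threshold:
                    ticket = {"asset": self.asset_id, "reading_c": temp_c,
                              "baseline_c": round(mu, 1), "z_score": round(z, 1)}
        self.readings.append(temp_c)
        return ticket


baseline = ThermalBaseline("TX-14")
for reading in [60.0, 61.0, 60.5, 59.5, 60.0, 61.0, 60.0, 60.5, 75.0]:
    ticket = baseline.check(reading)
    if ticket:
        print("open ticket:", ticket)  # only the 75.0 reading triggers a ticket
```

Because flagged readings are still appended, a lasting change in operating regime gradually becomes the new normal; excluding flagged readings would make the check stricter but risks alerting indefinitely after a legitimate change.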

Concrete use cases demonstrate how automation translates into outcomes:

– Automated work order creation from anomaly scores, with asset criticality and risk embedded in the priority
– Dynamic routing for field crews that adjusts to traffic and weather inputs, cutting travel (windshield) time
– Closed‑loop control for pumping stations that modulates variable frequency drives based on forecasted demand
– Materials pre‑kitting triggered by predicted failure windows so the right parts arrive with the crew
– Shift hand‑off summaries that compile key events, model forecasts, and pending hazards into a concise brief

These automations reduce friction in the system, aligning attention and resources to where they matter most.
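The first use case, embedding criticality in the priority, can be sketched as a risk score that multiplies a calibrated anomaly score by a 1 to 5 criticality rating. The scoring rule, the P1/P2/P3 bands, and the `WorkOrder` fields are hypothetical; many organizations use a likelihood‑versus‑consequence risk matrix instead.

```python
from dataclasses import dataclass


@dataclass
class WorkOrder:
    asset_id: str
    anomaly_score: float  # model output, assumed calibrated to [0, 1]
    criticality: int      # 1 (minor) .. 5 (safety- or service-critical)
    risk: float
    priority: str


def create_work_order(asset_id: str, anomaly_score: float, criticality: int) -> WorkOrder:
    """Turn an anomaly score into a prioritized work order (illustrative bands)."""
    if not 0.0 <= anomaly_score <= 1.0:
        raise ValueError("anomaly_score must be in [0, 1]")
    if criticality not in range(1, 6):
        raise ValueError("criticality must be an integer from 1 to 5")
    risk = anomaly_score * criticality / 5  # normalized risk in [0, 1]
    if risk >= 0.6:
        priority = "P1"
    elif risk >= 0.3:
        priority = "P2"
    else:
        priority = "P3"
    return WorkOrder(asset_id, anomaly_score, criticality, round(risk, 3), priority)


queue = [
    create_work_order("VALVE-03", 0.40, 2),
    create_work_order("PUMP-07", 0.90, 5),
    create_work_order("FAN-12", 0.90, 2),
]
queue.sort(key=lambda wo: wo.risk, reverse=True)  # highest risk first
for wo in queue:
    print(wo.priority, wo.asset_id, wo.risk)
```

The same anomaly score (0.90) lands in different bands for a critical pump and a minor fan, which is the point of embedding criticality rather than ranking on model output alone.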

Human‑in‑the‑loop remains essential. Well‑designed interfaces present recommendations with context: confidence scores, leading signals, and expected impacts. Operators can accept, modify, or reject each recommended action, and those decisions and their outcomes feed back to refine rules and models. This loop preserves accountability and builds trust while avoiding blind overreliance on algorithms. Performance metrics help quantify progress: reductions in MTTR, increased first‑time fix rates, fewer out‑of‑window maintenance activities, and improved schedule adherence. Even incremental changes can compound: saving five minutes of triage on each of 3,000 alerts a month, for instance, would free 250 hours, roughly 31 eight‑hour shifts. Automation does not replace expertise; it elevates it by removing drudgery and clarifying decisions.
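The accept/modify/reject loop is only useful if decisions are captured in a form that can be analyzed. A minimal sketch, assuming decisions are tallied per alert type and that a high rejection rate marks a rule or model worth revisiting; `DecisionLog`, `needs_review`, and the default thresholds are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List

DECISIONS = ("accept", "modify", "reject")


class DecisionLog:
    """Tallies operator responses to automated recommendations, per alert type."""

    def __init__(self) -> None:
        self._counts: Dict[str, Counter] = defaultdict(Counter)

    def record(self, alert_type: str, decision: str) -> None:
        if decision not in DECISIONS:
            raise ValueError(f"decision must be one of {DECISIONS}")
        self._counts[alert_type][decision] += 1

    def rates(self, alert_type: str) -> Dict[str, float]:
        """Share of accept/modify/reject decisions for one alert type."""
        counts = self._counts.get(alert_type, Counter())
        total = sum(counts.values())
        return {d: (counts[d] / total if total else 0.0) for d in DECISIONS}

    def needs_review(self, min_count: int = 20, max_reject_rate: float = 0.3) -> List[str]:
        """Alert types rejected often enough that their rule or model should be revisited."""
        flagged = []
        for alert_type, counts in self._counts.items():
            total = sum(counts.values())
            if total >= min_count and counts["reject"] / total > max_reject_rate:
                flagged.append(alert_type)
        return sorted(flagged)


log = DecisionLog()
for decision in ["accept"] * 6 + ["modify"] * 2 + ["reject"] * 2:
    log.record("pump_vibration", decision)
for decision in ["reject"] * 3 + ["accept"]:
    log.record("hvac_supply_temp", decision)
print(log.rates("pump_vibration"))
print(log.needs_review(min_count=4))  # ['hvac_supply_temp']
```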

Predictive Analytics: Turning Data Into Foresight

Predictive analytics transforms historical and real‑time data into probabilities of future events. In infrastructure, this often centers on predicting failure modes, remaining useful life (RUL), and demand fluctuations. Models range from straightforward regression and survival analysis to time‑series methods and ensembles. The right choice depends on data quality, semantics, and latency needs, not on novelty. A pipeline vibration model may rely on spectral features from accelerometers; a substation model might incorporate weather, load, and breaker operations; a building energy forecast could blend occupancy patterns with temperature and solar irradiance.
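As an illustration of a spectral feature, the sketch below finds the dominant vibration frequency and its amplitude in a window of accelerometer samples. It uses a direct discrete Fourier transform from the standard library so it runs anywhere; the function name and the synthetic signal are assumptions, and real pipelines would typically use an FFT and track specific fault frequencies (such as bearing defect frequencies) rather than only the largest peak.

```python
import cmath
import math
from typing import List, Tuple


def dominant_frequency(samples: List[float], sample_rate_hz: float) -> Tuple[float, float]:
    """Return (frequency_hz, amplitude) of the strongest non-DC spectral line.

    Uses a direct DFT, which is O(n^2) and only suitable for short windows.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove DC offset (sensor bias)
    best_k, best_mag = 0, 0.0
    # Bins 1 .. just below Nyquist; the Nyquist bin would need a different amplitude scale.
    for k in range(1, (n + 1) // 2):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    # Bin spacing is sample_rate / n; the factor 2/n converts DFT magnitude to sine amplitude.
    return best_k * sample_rate_hz / n, 2 * best_mag / n


# Synthetic 1 s window at 200 Hz: a 50 Hz line (amplitude 0.5) plus a weaker 20 Hz line.
fs = 200
signal = [0.5 * math.sin(2 * math.pi * 50 * t / fs) + 0.1 * math.sin(2 * math.pi * 20 * t / fs)
          for t in range(fs)]
freq, amp = dominant_frequency(signal, fs)
print(f"{freq:.1f} Hz, amplitude {amp:.3f}")  # 50.0 Hz, amplitude 0.500
```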

Effective programs respect the full lifecycle:

– Data readiness: align sensors with failure physics, choose sampling rates high enough to capture the relevant frequencies, and capture maintenance labels with standardized taxonomies
– Feature engineering: extract domain‑relevant signatures such as harmonics, kurtosis, pressure transients, and thermal gradients
– Model governance: benchmark against simple baselines, track drift, and monitor error metrics such as MAE and RMSE, plus precision and recall for rare events
– Deployment and feedback: evaluate lead time (how far in advance a warning arrives) alongside false‑positive cost

Importantly, model accuracy must be paired with operational utility; a slightly less accurate model that gives earlier warnings may deliver greater value.
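The error metrics and lead time named in the list are straightforward to compute. A minimal sketch in plain Python; the function names are hypothetical, alerts and failures are assumed to be aligned one entry per asset‑window, and lead time is measured only over failures that were correctly warned.

```python
import math
from typing import List, Sequence, Tuple


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, e.g. for remaining-useful-life forecasts in days."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error; penalizes large misses more heavily than MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def precision_recall(alerted: Sequence[bool], failed: Sequence[bool]) -> Tuple[float, float]:
    """Precision and recall for a rare-event alarm, one entry per asset-window."""
    tp = sum(a and f for a, f in zip(alerted, failed))
    fp = sum(a and not f for a, f in zip(alerted, failed))
    fn = sum(f and not a for a, f in zip(alerted, failed))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def mean_lead_time(hits: List[Tuple[float, float]]) -> float:
    """Average hours from first warning to failure, over correctly warned failures."""
    return sum(failure_h - warning_h for warning_h, failure_h in hits) / len(hits)


print(mae([10, 20, 30], [12, 18, 33]), rmse([10, 20, 30], [12, 18, 33]))
print(precision_recall([True, True, False, True, False], [True, False, False, True, True]))
print(mean_lead_time([(100, 160), (200, 236)]))  # 48.0
```

Reporting precision and recall rather than accuracy matters here: with rare failures, a model that never alerts can still score high accuracy.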

Practical results depend on thoughtful thresholds and economics. For example, a forecast that identifies a 70% failure probability within 14 days is actionable if parts and crews can be scheduled within that window; otherwise, the alert merely adds noise. Many organizations report reductions in unplanned outages and spare‑parts stockouts after adopting predictive planning, with maintenance costs trending down and asset availability rising. Limitations remain: cold‑start assets lack history, sensors drift, and changes in operating regimes can invalidate older patterns. Mitigations include transfer learning from similar assets, active learning that prioritizes new labels, and periodic model recalibration tied to seasonal shifts. Predictive analytics succeeds when it closes the loop between data, decision, and documented outcome.
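The 70%‑in‑14‑days example can be turned into an explicit decision rule. This sketch assumes a simple expected‑value test plus a feasibility check on scheduling lead time; it treats the intervention as fully preventing the failure, and the cost figures are hypothetical.

```python
def should_schedule(p_fail: float, window_days: float, lead_time_days: float,
                    failure_cost: float, intervention_cost: float) -> bool:
    """Decide whether a failure forecast is worth acting on.

    Simplifying assumptions: the intervention fully prevents the failure,
    and all costs are in the same currency.
    """
    if lead_time_days > window_days:
        return False  # parts and crews cannot arrive inside the window: the alert is noise
    expected_loss_avoided = p_fail * failure_cost
    return expected_loss_avoided > intervention_cost


# 70% failure probability within 14 days, with hypothetical costs.
print(should_schedule(0.70, 14, lead_time_days=10, failure_cost=50_000, intervention_cost=8_000))  # True
print(should_schedule(0.70, 14, lead_time_days=21, failure_cost=50_000, intervention_cost=8_000))  # False
```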

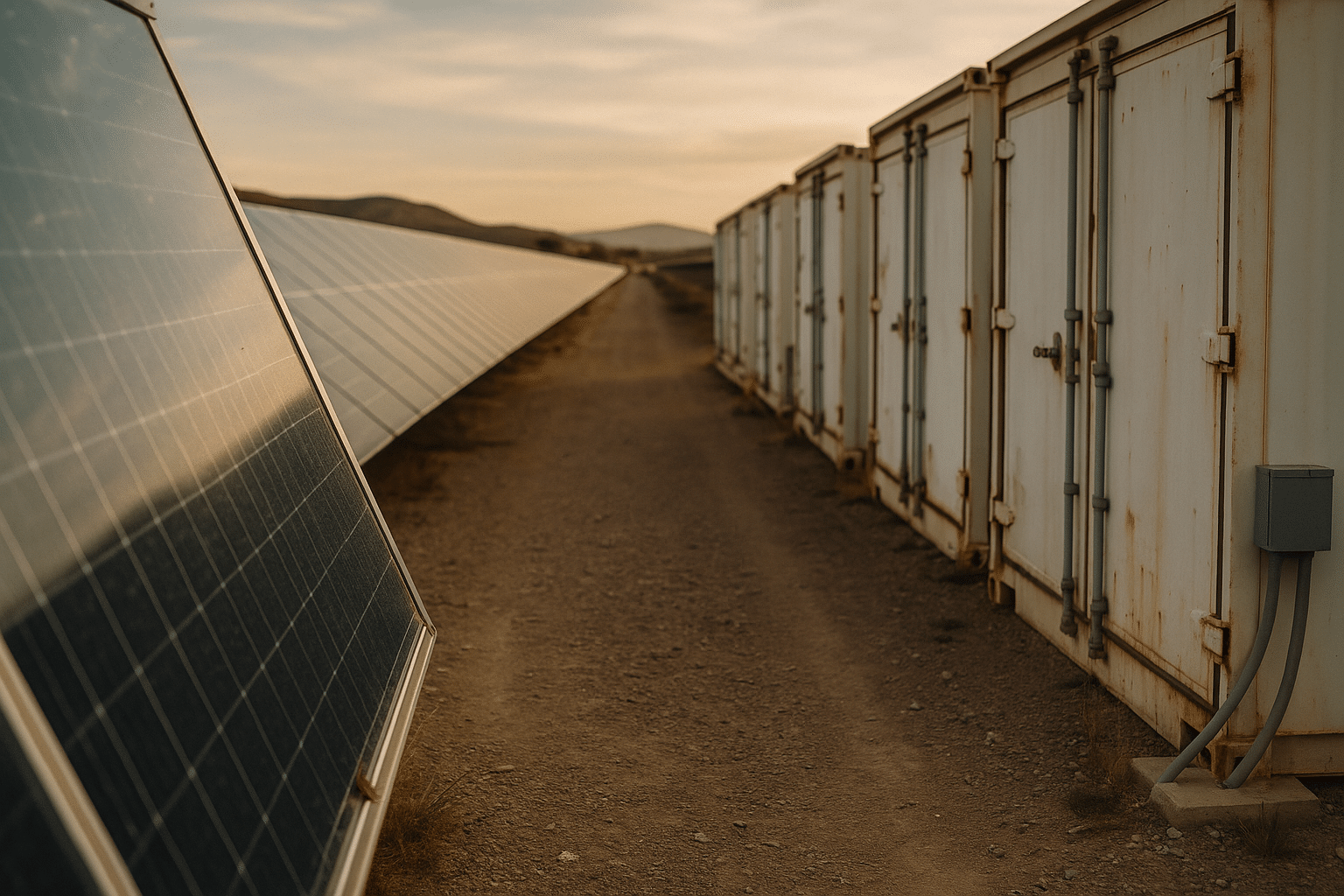

Smart Infrastructure: Architectures, Sensors, and Edge Intelligence

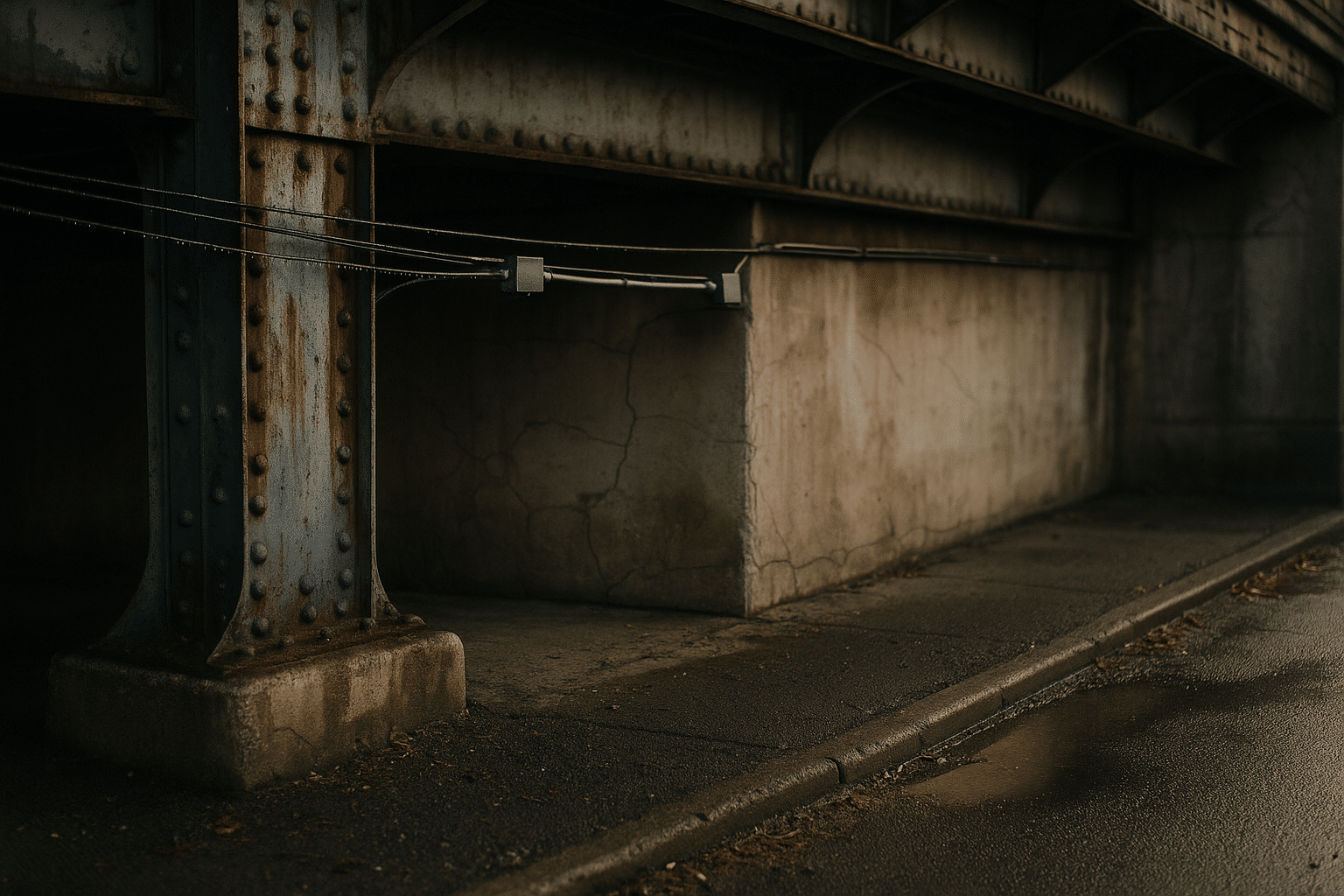

Smart infrastructure weaves sensing, connectivity, compute, and models into a coordinated fabric. A layered architecture helps keep complexity manageable:

– Device layer: sensors for vibration, strain, temperature, acoustic emissions, power quality, flow, pressure, and air quality; gateways provide protocol translation and buffering
– Edge layer: on‑site compute performs filtering, compression, anomaly detection, and safety interlocks with millisecond latency
– Platform layer: scalable storage and feature pipelines enable training, monitoring, and access control
– Application layer: dashboards, alerts, and workflow integrations bring insights into daily operations

This pattern separates concerns while allowing incremental upgrades.

Edge intelligence earns its place by reducing bandwidth, improving resilience, and meeting real‑time requirements. For example, a bridge strain gauge network can detect micro‑events caused by heavy vehicles, classify benign versus concerning signatures locally, and send only summaries upstream. In energy systems, local controllers can smooth voltage sags or curtail loads during frequency excursions without waiting for cloud round‑trips. The combination of edge autonomy with central coordination offers a balanced approach: immediate action at the periphery and portfolio‑level optimization at the core.
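The bridge example amounts to “classify locally, send summaries upstream.” A minimal sketch, assuming each vehicle‑induced micro‑event has already been reduced to a peak strain and a duration; `StrainEvent`, `summarize`, and the 150 microstrain threshold are hypothetical, and a real classifier would use richer signatures than a single peak.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class StrainEvent:
    """One vehicle-induced micro-event detected at the edge (hypothetical fields)."""
    peak_microstrain: float
    duration_s: float


CONCERN_THRESHOLD = 150.0  # hypothetical; a real limit comes from the structure's assessment


def classify(event: StrainEvent) -> str:
    return "concerning" if event.peak_microstrain >= CONCERN_THRESHOLD else "benign"


def summarize(events: List[StrainEvent]) -> Dict[str, object]:
    """Compact upstream message that replaces the raw high-rate samples."""
    concerning = [e for e in events if classify(e) == "concerning"]
    peaks = [e.peak_microstrain for e in events]
    return {
        "event_count": len(events),
        "concerning_count": len(concerning),
        "max_peak_microstrain": max(peaks, default=0.0),
        "mean_peak_microstrain": mean(peaks) if peaks else 0.0,
        # Full detail is sent only for the events that merit engineering review.
        "concerning_events": [(e.peak_microstrain, e.duration_s) for e in concerning],
    }


window = [StrainEvent(40, 0.8), StrainEvent(80, 1.1), StrainEvent(170, 1.6), StrainEvent(60, 0.9)]
print(summarize(window))
```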

Digital twins further amplify value by creating living models of assets and networks. These twins ingest telemetry, maintenance records, and environmental data to simulate scenarios—how a heatwave shifts transformer aging, how pump cavitation risk changes with inlet pressure, or how resurfacing schedules affect traffic flow. When twins are linked to actionable workflows, planning becomes a continuous activity rather than an annual exercise. Interoperability and openness matter; data models and APIs should minimize lock‑in and support cross‑vendor assets. Security is foundational: network segmentation, secrets management, certificate rotation, immutable logging, and least‑privilege access reduce blast radius. With careful design, smart infrastructure behaves like a calm, self‑aware system: sensing, learning, and responding without drama.
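A twin scenario such as “how a heatwave shifts transformer aging” often rests on a physics‑based sub‑model. The sketch below uses the aging acceleration factor from IEEE Std C57.91 for thermally upgraded paper insulation, F_AA = exp(15000/383 − 15000/(θ_H + 273)), which equals 1 at a 110 °C hot‑spot temperature θ_H, and averages it over a day to get an equivalent aging factor. The hot‑spot profiles are made up; a real twin would derive them from load, ambient temperature, and the transformer’s thermal model.

```python
import math
from typing import List, Tuple


def aging_acceleration_factor(hot_spot_c: float) -> float:
    """IEEE C57.91 aging acceleration factor (110 degC reference hot spot)."""
    return math.exp(15000 / 383 - 15000 / (hot_spot_c + 273))


def equivalent_aging(profile: List[Tuple[float, float]]) -> float:
    """Duration-weighted average aging factor over (hot_spot_c, duration_h) intervals."""
    total_h = sum(h for _, h in profile)
    return sum(aging_acceleration_factor(t) * h for t, h in profile) / total_h


normal_day = [(90.0, 12), (100.0, 12)]     # hypothetical hot-spot profile: degC, hours
heatwave_day = [(100.0, 12), (118.0, 12)]  # same load, hotter ambient (hypothetical)
for name, profile in [("normal", normal_day), ("heatwave", heatwave_day)]:
    f_eqa = equivalent_aging(profile)
    print(f"{name}: F_EQA = {f_eqa:.2f}, insulation aging = {f_eqa * 24:.1f} h per 24 h")
```

Because the factor is exponential in temperature, a few hot afternoons can dominate a day’s aging, which is why scenario runs are more informative than average‑temperature estimates.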

Conclusion and Phased ROI Roadmap for Operations Leaders

Leaders adopting AI for infrastructure management benefit from a pragmatic, staged approach. Start where the data is reliable and the pain is visible, then expand as value compounds. A workable roadmap looks like this:

– Phase 1: instrument priority assets, centralize telemetry, and digitize work management; define KPIs such as MTBF, MTTR, first‑time fix rate, energy per unit output, and leak rate
– Phase 2: deploy targeted predictive models for a small asset class; tie alerts to scheduled work and parts logistics; measure lead time and false‑positive cost
– Phase 3: add edge inference for latency‑sensitive controls and create a digital twin for scenario planning; codify playbooks for routine events
– Phase 4: scale to adjacent assets and sites; introduce optimization across portfolios (e.g., shift maintenance to off‑peak windows); formalize MLOps with drift monitoring and retraining cadences

At each phase, publish before‑and‑after metrics to keep stakeholders aligned.
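For the drift monitoring in Phase 4, one widely used check is the Population Stability Index (PSI), which compares a feature’s distribution at training time with its recent distribution. This is a sketch, not the only option: the equal‑width binning, the 1e‑6 floor, and the 0.25 retraining trigger (a common rule of thumb) are assumptions to tune per feature.

```python
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], lo: float, width: float, bins: int) -> List[float]:
    counts = [0] * bins
    for v in values:
        idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp out-of-range values into edge bins
        counts[idx] += 1
    # A small floor avoids log(0) when a bin is empty in one of the samples.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(reference: Sequence[float], recent: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index, with bins fixed on the reference (training) data."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature
    ref = _bin_shares(reference, lo, width, bins)
    cur = _bin_shares(recent, lo, width, bins)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


training = [i / 100 for i in range(1000)]  # synthetic feature, roughly uniform on [0, 10)
this_month = [v + 3.0 for v in training]   # same shape, shifted upward
score = psi(training, this_month)
print(f"PSI = {score:.2f}, retrain = {score > 0.25}")
```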

Governance and trust are not afterthoughts. Establish data ownership, quality standards, and audit trails for models that influence safety or compliance. Encourage human‑in‑the‑loop validation and record operator overrides to refine thresholds. Train teams on interpreting forecasts, not just on using dashboards. Cybersecurity must be continual: patch management for edge devices, anomaly detection on network traffic, backup and recovery tests, and incident drills that include both IT and OT staff. When governance is visible, adoption accelerates because people understand how decisions are made and how risks are handled.

Financially, evaluate total cost of ownership across sensors, connectivity, compute, licenses, and change management. Compare those costs to quantified benefits: avoided downtime hours, valued at the lost revenue or service penalties they would otherwise incur; reduced overtime and emergency dispatch; lower inventory carrying costs; and energy savings. Techniques such as net present value (NPV) and payback period clarify trade‑offs without hype. The headline is simple: AI, applied carefully, turns infrastructure from a source of surprise into a source of steady performance. For asset owners, operators, and public stewards, that means fewer disruptions, safer crews, and a system that quietly does its job, day after day.
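NPV and payback period can be computed in a few lines. A minimal sketch, assuming annual net cash flows with the investment at year 0 and an undiscounted payback interpolated within the payback year; the program figures and the 8% discount rate are hypothetical.

```python
from typing import Optional, Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] is today, cash_flows[t] is the end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_years(cash_flows: Sequence[float]) -> Optional[float]:
    """Simple (undiscounted) payback period, interpolated within the payback year."""
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for t in range(1, len(cash_flows)):
        previous = cumulative
        cumulative += cash_flows[t]
        if cumulative >= 0:
            return (t - 1) + (-previous / cash_flows[t])
    return None  # not paid back within the horizon


# Hypothetical program: 250k up front, 90k net annual benefit for four years.
flows = [-250_000, 90_000, 90_000, 90_000, 90_000]
print(f"NPV at 8%: {npv(0.08, flows):,.0f}")
print(f"Payback: {payback_years(flows):.2f} years")  # Payback: 2.78 years
```

Simple payback ignores the time value of money and anything after break‑even, so it works best as a communication aid alongside NPV rather than as the deciding metric.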