Exploring Modern AI Technology Stacks and Their Components

Outline: Mapping the Modern AI Technology Stack

Artificial intelligence feels vast because it is a system of systems. The modern stack layers data engineering, modeling approaches, training infrastructure, deployment tools, and governance into a coordinated pipeline that turns raw information into decisions. This outline orients you before we dive deep into machine learning, neural networks, and deep learning, so you can see where each concept fits, how they differ, and when each earns its keep. Picture a relay race: data prepares the baton, models run the distance, infrastructure keeps the track clear, and monitoring calls out when the pace slips.

At the base sits the data layer. It includes acquisition, storage, quality checks, labeling, and feature creation. Above it sits the modeling layer, which ranges from classical machine learning methods to neural networks and deep learning. Training and evaluation form the engine room, governing optimization choices, validation strategy, and metrics. Deployment and operations bring models to users through services, batch jobs, or embedded devices. The final layer is oversight: versioning, audit trails, bias checks, and performance dashboards. Each layer informs the others, because a change in data can ripple through metrics, latency, and even user experience.

In this article, we will follow a practical path that mirrors real projects:

– Start with machine learning foundations: problem framing, tasks, metrics, and pipelines.

– Explore neural networks as function approximators that learn features and patterns with layered transformations.

– Expand to deep learning, where scale, representation learning, and specialized architectures push performance in complex modalities.

– Connect stack choices to constraints: data volume, latency budgets, interpretability needs, and hardware limits.

– Close with a grounded conclusion about selecting and evolving your stack responsibly.

By keeping this outline in mind, you will recognize trade-offs quickly. If you need transparency and modest compute, classical approaches often shine. When patterns are intricate and data is plentiful, neural networks and deeper variants thrive. And when the problem demands sophisticated perception or language understanding, deep learning architectures trained at scale can reach accuracy that smaller models rarely match. No single layer wins alone; the craft is in composing the layers so they balance accuracy, speed, cost, and accountability.

Machine Learning: Concepts, Tasks, and Practical Pipelines

Machine learning turns data into predictive or descriptive rules through optimization rather than hand-coded logic. The field organizes around problem types: supervised learning predicts labeled targets, unsupervised learning discovers structure in unlabeled data, and reinforcement learning optimizes behavior through reward feedback. In day-to-day work, most teams rely on supervised learning for classification and regression, and on unsupervised methods for clustering, dimensionality reduction, and anomaly detection. The goal is not to chase novelty but to deliver stable value: forecasts that guide inventory, scores that prioritize outreach, or flags that surface unusual events to a human reviewer.

Reliable practice begins with framing. Define the unit of prediction, the decision cadence, and the cost of errors. Choose metrics that reflect those costs: accuracy is easy to read but can mislead under class imbalance; precision and recall clarify trade-offs between false alarms and misses; area under the ROC curve summarizes ranking quality; mean absolute error (MAE) and root mean squared error (RMSE) both suit numeric targets, but RMSE squares each error and therefore penalizes large misses and outliers more heavily. Split data along time or entity boundaries to mimic real use. Cross-validation can stabilize estimates when data is scarce, while a dedicated holdout offers a final, unbiased check.
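
These metrics are simple enough to compute by hand, which makes their trade-offs easy to see. The Python sketch below is dependency-free and assumes binary labels with 1 as the positive class. The function names are illustrative, and in practice a well-tested library implementation is usually preferable. The split helper assumes each record is a tuple whose first field is a comparable timestamp.

```python
import math


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels, treating 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy_precision_recall(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of alarms that were real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real cases that were caught
    return accuracy, precision, recall


def roc_auc(y_true, scores):
    """Chance that a random positive is scored above a random negative (ties count half).
    Assumes both classes are present; O(P * N), fine for small illustrations."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    # Squaring before averaging lets a few large errors dominate.
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def time_based_split(records, cutoff):
    """Train on records dated before `cutoff`, evaluate on the rest."""
    train = [r for r in records if r[0] < cutoff]
    test = [r for r in records if r[0] >= cutoff]
    return train, test
```

On a 95/5 class split, a model that always predicts the majority class scores 0.95 accuracy but 0.0 recall, which is exactly the imbalance trap described above. Likewise, errors of [0, 0, 0, 4] give an MAE of 1.0 but an RMSE of 2.0, showing RMSE's heavier penalty on a single large miss.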

A practical pipeline typically includes:

– Data cleaning: reconcile identifiers, handle missingness, and validate ranges.

– Feature engineering: encode categories, aggregate histories, and normalize numeric scales.

– Model selection: compare simple baselines with more flexible learners.

– Hyperparameter tuning: search systematically, logging every trial.

– Evaluation: report both aggregate metrics and subgroup performance.

– Deployment: package the model with feature logic, tests, and monitoring hooks.
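
A minimal sketch of how the cleaning and feature steps can be packaged so the same logic runs in training and in deployment. Everything here is hypothetical: the class name, the column names, and the choices to impute with the median and clip to a validated range are assumptions for illustration, not a prescribed method. It also includes a majority-class baseline and a per-subgroup accuracy report, matching the model-selection and evaluation steps.

```python
import statistics


class FeaturePipeline:
    """Hypothetical minimal pipeline: learns cleaning and encoding parameters from
    training rows, then reapplies them unchanged at inference time."""

    def __init__(self, numeric, categorical, valid_ranges):
        self.numeric = numeric            # names of numeric columns
        self.categorical = categorical    # names of categorical columns
        self.valid_ranges = valid_ranges  # column -> (low, high) accepted range

    def fit(self, rows):
        self.medians, self.means, self.stds, self.vocab = {}, {}, {}, {}
        for col in self.numeric:
            observed = [r[col] for r in rows if r.get(col) is not None]
            self.medians[col] = statistics.median(observed)
            self.means[col] = statistics.fmean(observed)
            self.stds[col] = statistics.pstdev(observed) or 1.0  # avoid dividing by zero
        for col in self.categorical:
            self.vocab[col] = sorted({r[col] for r in rows if r.get(col) is not None})
        return self

    def transform_row(self, row):
        features = []
        for col in self.numeric:
            value = row.get(col)
            if value is None:
                value = self.medians[col]             # impute missing values
            low, high = self.valid_ranges[col]
            value = min(max(value, low), high)        # clip values outside the valid range
            features.append((value - self.means[col]) / self.stds[col])  # standardize
        for col in self.categorical:
            # One-hot encode; categories unseen during fit become all zeros.
            features.extend(1.0 if row.get(col) == v else 0.0 for v in self.vocab[col])
        return features


def majority_baseline(labels):
    """A floor to beat: always predict the most frequent training label."""
    majority = max(set(labels), key=labels.count)
    return lambda features: majority


def subgroup_accuracy(rows, labels, predict, pipeline, group_col):
    """Accuracy per value of `group_col`, so weak subgroups are not hidden by the average."""
    totals, correct = {}, {}
    for row, label in zip(rows, labels):
        group = row.get(group_col)
        totals[group] = totals.get(group, 0) + 1
        hit = predict(pipeline.transform_row(row)) == label
        correct[group] = correct.get(group, 0) + int(hit)
    return {g: correct[g] / totals[g] for g in totals}
```

Because `fit` stores the medians, scaling statistics, and category vocabulary, calling `transform_row` at serving time reproduces the training-time features without recomputing anything from live data.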

Examples make this concrete. A churn model might use three months of interactions to predict attrition in the next month; optimizing for recall means fewer at-risk customers slip away unnoticed, at the cost of more false alarms. An anomaly detector for equipment can combine rolling statistics with probabilistic thresholds, escalating only when multiple signals align. For demand forecasting, horizon-aware validation respects seasonality and events. None of these require exotic methods; thoughtful design of features and metrics often yields large gains. When those gains plateau, neural networks can take on the heavy lifting by learning features automatically, especially where raw signals such as images, audio, and sequences carry information that manual features struggle to capture.
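
The equipment example can be sketched as a rolling z-score per sensor, where the z threshold is derived from a chosen two-sided tail probability under a Gaussian assumption, and an alert is raised only when at least `min_agreeing` sensors are unusual at the same step. The channel names, window size, tail probability, and agreement rule are all illustrative assumptions.

```python
from collections import deque
from statistics import NormalDist, fmean, pstdev


class RollingZScore:
    """Flags a value whose z-score against the previous `window` values exceeds the
    two-sided Gaussian threshold implied by `tail_prob` (0.01 gives about 2.58)."""

    def __init__(self, window, tail_prob):
        self.values = deque(maxlen=window)
        self.z_threshold = NormalDist().inv_cdf(1 - tail_prob / 2)

    def update(self, x):
        flagged = False
        if len(self.values) == self.values.maxlen:  # wait for a full window first
            mu, sigma = fmean(self.values), pstdev(self.values)
            flagged = sigma > 0 and abs(x - mu) / sigma > self.z_threshold
        self.values.append(x)
        return flagged


class MultiSignalDetector:
    """Escalates only when at least `min_agreeing` channels are unusual at the same step."""

    def __init__(self, channels, window=20, tail_prob=0.01, min_agreeing=2):
        self.detectors = {c: RollingZScore(window, tail_prob) for c in channels}
        self.min_agreeing = min_agreeing

    def update(self, reading):
        flagged = [c for c, det in self.detectors.items() if det.update(reading[c])]
        return len(flagged) >= self.min_agreeing, flagged
```

A temperature spike on its own is reported in the flag list but does not escalate; a simultaneous spike in temperature and vibration does. Requiring agreement trades a little sensitivity for far fewer single-sensor false alarms.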

Neural Networks: Architectures, Training Dynamics, and Use Cases

Neural networks approximate complex functions by stacking linear transformations with nonlinear activations. Each layer transforms its input into a representation in which the next layer faces a simpler problem. At the end, a final output layer maps representations to outputs such as classes or numeric values. Training adjusts weights to minimize a loss function using gradient-based optimization, made feasible by backpropagation, which applies the chain rule to compute every weight's gradient in a single backward pass. Activations like rectified linear units (ReLU) help gradients flow; normalization stabilizes the distributions of intermediate activations; residual connections give gradients a shortcut through deep stacks.
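
To make the forward pass and backpropagation concrete, here is a sketch of a one-hidden-layer network with ReLU activations and a squared-error loss, written in plain Python so every gradient is visible. The architecture, initialization, and names are assumptions chosen for illustration. The `numerical_grad` helper compares the hand-derived gradients against central finite differences, a common sanity check.

```python
import copy
import random


def init_params(d_in, d_hidden, seed=0):
    """Small random weights for a one-hidden-layer network with a scalar output."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.gauss(0.0, 0.5) for _ in range(d_in)] for _ in range(d_hidden)],
        "b1": [0.1] * d_hidden,
        "w2": [rng.gauss(0.0, 0.5) for _ in range(d_hidden)],
        "b2": 0.0,
    }


def forward(params, x):
    # Linear transformation, then ReLU nonlinearity, then a linear output layer.
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(params["W1"], params["b1"])]
    h = [max(0.0, zj) for zj in z]
    y_hat = sum(w * hj for w, hj in zip(params["w2"], h)) + params["b2"]
    return z, h, y_hat


def loss_and_grads(params, x, y):
    """Squared-error loss 0.5 * (y_hat - y)**2 and its gradients via the chain rule."""
    z, h, y_hat = forward(params, x)
    err = y_hat - y                                   # dL/dy_hat
    grad_w2 = [err * hj for hj in h]
    grad_z = [err * w * (1.0 if zj > 0 else 0.0)      # ReLU passes gradient only where z > 0
              for w, zj in zip(params["w2"], z)]
    grad_W1 = [[gz * xi for xi in x] for gz in grad_z]
    grads = {"W1": grad_W1, "b1": grad_z, "w2": grad_w2, "b2": err}
    return 0.5 * err ** 2, grads


def sgd_step(params, grads, lr):
    """Move every parameter a small step against its gradient."""
    return {
        "W1": [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(params["W1"], grads["W1"])],
        "b1": [b - lr * g for b, g in zip(params["b1"], grads["b1"])],
        "w2": [w - lr * g for w, g in zip(params["w2"], grads["w2"])],
        "b2": params["b2"] - lr * grads["b2"],
    }


def numerical_grad(params, x, y, name, index=(), eps=1e-6):
    """Central finite difference for one parameter; `index` locates it inside `name`."""
    def loss_at(delta):
        p = copy.deepcopy(params)
        if not index:
            p[name] += delta
        else:
            target = p[name]
            for i in index[:-1]:
                target = target[i]
            target[index[-1]] += delta
        return loss_and_grads(p, x, y)[0]
    return (loss_at(eps) - loss_at(-eps)) / (2 * eps)
```

With a small learning rate, one `sgd_step` should lower the loss on the example it was computed from. Frameworks automate exactly this chain-rule bookkeeping for much larger networks.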

Several architectural patterns recur:

– Dense (fully connected) layers for tabular and small-scale tasks.

– Convolutional layers for spatial data, capturing local patterns with shared kernels.

– Recurrent and gated layers for sequences, modeling temporal dependencies.

– Attention mechanisms that weight relationships between elements without fixed locality.

– Encoders and decoders that compress and reconstruct, useful for denoising and representation learning.
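
Two of these patterns are small enough to write out directly. The sketch below shows a 1D convolution that reuses one kernel at every position (implemented as cross-correlation, as most deep learning libraries do) and scaled dot-product attention, where each query mixes all values according to softmax-normalized similarity with the keys, regardless of distance. Plain Python lists stand in for tensors; this is illustrative, not efficient.

```python
import math


def conv1d(signal, kernel):
    """'Valid' 1D convolution, written as cross-correlation like most DL libraries:
    one shared kernel slides across every position of the input."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def softmax(scores):
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query takes a weighted average of all
    values, weighted by softmax(q . k / sqrt(d)); position plays no role."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs
```

With the kernel [-1, 1], the convolution responds only where the signal changes, a tiny edge detector. With identical keys, attention spreads its weight evenly and returns the average of the values.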

Training dynamics matter as much as architecture. Learning rates set the pace; too high, and loss jitters or diverges; too low, and training crawls. Mini-batches balance the noisy but cheap updates of single-example steps against the stable but expensive updates of full-batch gradient descent. Weight decay and dropout add regularization against overfitting. Early stopping halts training once validation loss stops improving. Initialization and normalization techniques, chosen thoughtfully, can prevent vanishing or exploding gradients. Monitoring not just loss but gradient norms, activation ranges, and effective batch sizes helps diagnose sluggish learning or silent failures.
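
The sketch below puts several of these knobs into one loop: shuffled mini-batches, an L2 penalty on the weight (implemented as an extra `2 * weight_decay * w` gradient term), and early stopping with a patience counter that keeps the best validation checkpoint. It fits a one-feature linear model to synthetic data; the model, data, and hyperparameter values are assumptions for illustration only.

```python
import random


def make_data(n, seed=42):
    """Synthetic pairs from y = 3x + 1 + Gaussian noise (illustrative only)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)))
    return data


def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_data, val_data, lr=0.05, batch_size=8, weight_decay=1e-3,
          max_epochs=200, patience=10, seed=0):
    rng = random.Random(seed)
    data = list(train_data)
    w, b = 0.0, 0.0
    best = (float("inf"), w, b, -1)            # (val_loss, w, b, epoch)
    stale_epochs = 0
    for epoch in range(max_epochs):
        rng.shuffle(data)                      # new mini-batch composition each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            residuals = [(w * x + b - y, x) for x, y in batch]
            # Gradient of mean squared error plus the L2 penalty weight_decay * w**2.
            grad_w = sum(2 * r * x for r, x in residuals) / len(batch) + 2 * weight_decay * w
            grad_b = sum(2 * r for r, _ in residuals) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
        val_loss = mse(w, b, val_data)
        if val_loss < best[0]:
            best, stale_epochs = (val_loss, w, b, epoch), 0   # keep the best checkpoint
        else:
            stale_epochs += 1
            if stale_epochs >= patience:       # early stopping
                break
    return best
```

Raising `lr` well past the stable range makes the validation loss jitter or blow up, while shrinking it stretches the number of epochs before early stopping fires, which mirrors the trade-offs described above.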

Use cases abound where neural networks excel. In vision, convolutions uncover edges, textures, and parts, composing them into objects. In audio, temporal models latch onto phonemes and tone. In time series, hybrid stacks blend convolutional and recurrent elements to detect motifs and long-range effects. For tabular data, dense networks can perform well when interactions are high-order and features are plentiful, though simple models remain competitive when signal-to-noise is modest. Interpretability has improved through techniques that attribute outputs to inputs or internal features, enabling audits that compare contributions across subgroups. The net result is a toolbox that adapts to structure: wherever data has shape, order, or context, a network can be arranged to match it.

Deep Learning: Scaling, Representation Learning, and Real-World Systems

Deep learning magnifies the neural network idea by increasing depth, width, data volume, and compute, unlocking representations that capture nuance across modalities. The payoff shows up in perception tasks, language understanding, recommendation, and control. As models scale, they often follow empirical regularities, sometimes called scaling laws: performance improves predictably with more data, parameters, and training steps, provided optimization remains stable. This scaling, however, is a negotiation with reality: memory limits, training time, energy use, and latency targets constrain what is feasible. The craft lies in balancing capacity with efficiency so the system is accurate, timely, and affordable.
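
One commonly reported form of this regularity is a power law in a resource such as parameter count N, roughly L(N) ≈ (N_c / N)^α, where N_c and α are constants fitted to measurements. Taking logs turns it into a straight line, log L = α log N_c − α log N, so the exponent can be estimated with ordinary least squares. The sketch below assumes clean measurements; real curves include noise, an irreducible loss floor, and regimes where the trend breaks, so extrapolating far beyond the measured range is risky.

```python
import math


def fit_power_law(sizes, losses):
    """Fit losses ~= (n_c / size) ** alpha by least squares on log-log axes.
    Returns (alpha, n_c)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    intercept = y_mean - slope * x_mean
    alpha = -slope                           # log L = alpha*log(n_c) - alpha*log(N)
    n_c = math.exp(intercept / alpha)
    return alpha, n_c


def predict_loss(n, alpha, n_c):
    return (n_c / n) ** alpha
```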

Several strategies make deep learning practical:

– Transfer learning: start from a model trained on broad data, then adapt to your domain with smaller labeled sets.

– Data augmentation: perturb inputs to expose the model to varied conditions without collecting new examples.

– Curriculum and sampling: order training examples to stabilize learning and emphasize rare but important patterns.

– Regularization at scale: mixup, stochastic depth, and label smoothing can improve generalization under heavy capacity.

– Inference optimizations: quantization, pruning, and distillation reduce model size and latency with manageable accuracy trade-offs.
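
Three of these techniques reduce to a few lines of arithmetic. The sketch below shows symmetric per-tensor int8 quantization (one of several quantization schemes), label smoothing that moves `epsilon` of the probability mass onto a uniform distribution, and mixup, which blends two examples and their labels with a weight drawn from a Beta distribution. Parameter defaults and function names are illustrative assumptions.

```python
import random


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [qi * scale for qi in q]


def smooth_labels(label, num_classes, epsilon=0.1):
    """Label smoothing: (1 - epsilon) * one_hot + epsilon / num_classes."""
    return [(1 - epsilon) * (1.0 if c == label else 0.0) + epsilon / num_classes
            for c in range(num_classes)]


def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Mixup: blend two examples and their labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam
```

Each quantized weight lands within half a quantization step (`scale / 2`) of its original value, which is the source of the "manageable accuracy trade-off": storage drops roughly fourfold relative to 32-bit floats while per-weight error stays bounded.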

Production systems must consider the entire path from training to serving. Feature parity means applying identical transformations in training and inference. Batch versus real-time serving depends on how quickly decisions must reach users. Retraining cadence should follow data drift signals rather than an arbitrary calendar. Shadow deployments can run a candidate model alongside the current one to compare behavior safely. Observability closes the loop: monitor input distributions, calibration, fairness metrics, and stability under load. Ethical considerations are not a separate layer but an integral constraint: document data provenance, seek representative coverage, and track performance across demographics to prevent harm.
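
One common way to turn "monitor input distributions" into a retraining signal is the population stability index (PSI), which compares the binned proportions of a feature between a reference sample and recent traffic. The sketch below bins by the reference quantiles. The 0.25 alert threshold is a widely used rule of thumb rather than a standard, and `shadow_agreement` is a hypothetical helper for comparing a shadow model's predictions with the live model's on the same requests.

```python
import bisect
import math
import statistics


def psi(reference, current, bins=10, eps=1e-6):
    """Population stability index between two samples of one numeric feature,
    using bins cut at the reference sample's quantiles."""
    edges = statistics.quantiles(reference, n=bins)   # bins - 1 cut points

    def proportions(values):
        counts = [0] * bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def should_retrain(psi_value, threshold=0.25):
    # 0.25 is a common rule of thumb for a "significant" shift, not a universal constant.
    return psi_value > threshold


def shadow_agreement(live_preds, shadow_preds):
    """Share of requests where a shadow candidate agrees with the live model."""
    return sum(a == b for a, b in zip(live_preds, shadow_preds)) / len(live_preds)
```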

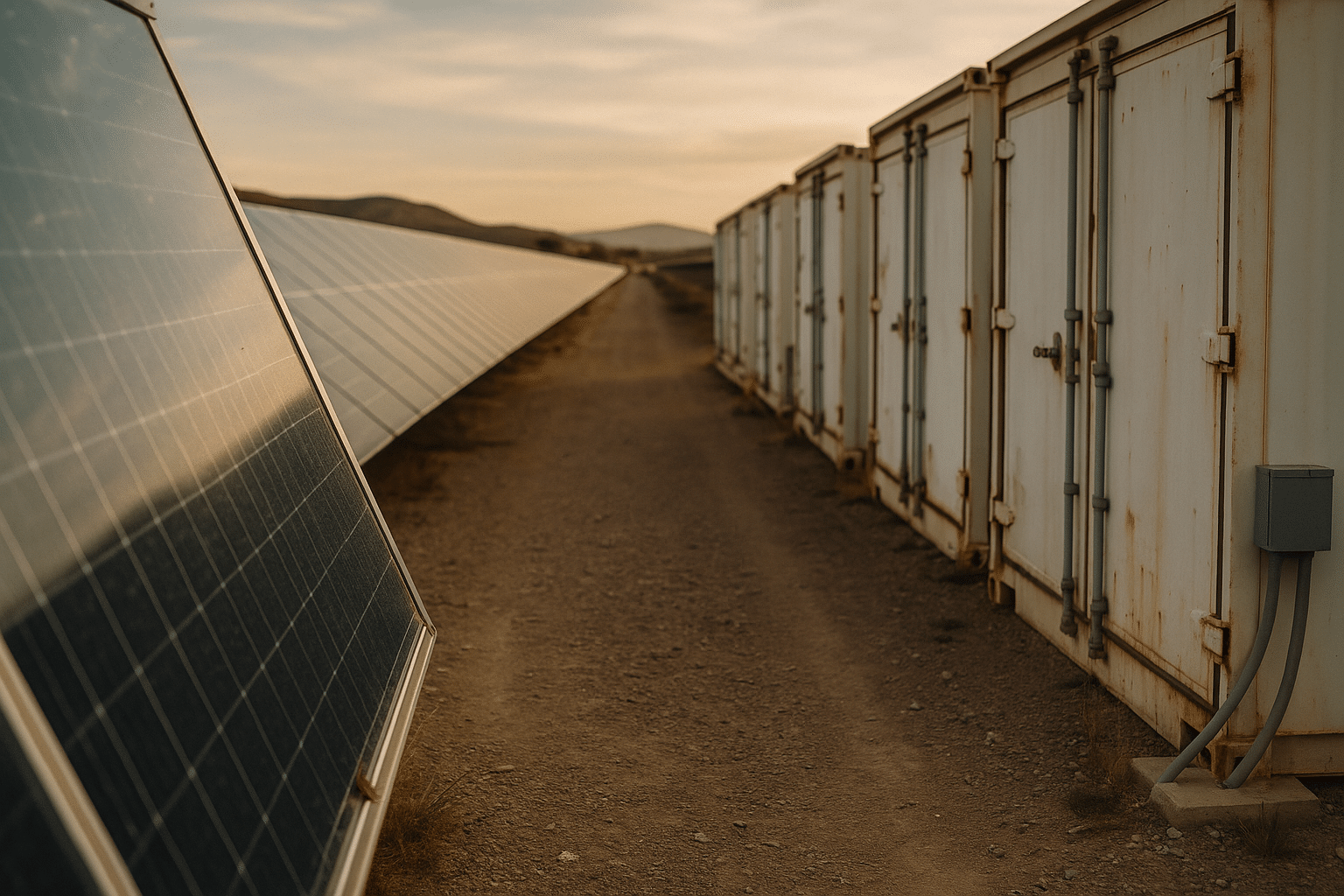

Concrete examples clarify trade-offs. A visual inspection system on a factory line may need sub-50-millisecond inference; quantization and model distillation can achieve that while preserving enough accuracy to detect defects. A document understanding workflow can rely on transfer learning to shrink labeling needs, then scale out with distributed training only if accuracy stalls. In streaming environments, sliding-window evaluation can reflect live conditions better than static benchmarks. Deep learning succeeds when these operational details are treated as first-class design concerns, not afterthoughts tacked on once a model scores well offline.
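
A sketch of two of these operational checks: accuracy over a sliding window of the most recent labeled predictions, and a nearest-rank percentile test against a latency budget such as the 50-millisecond target in the inspection example. The window size, the 99th-percentile choice, and the function names are assumptions.

```python
import math
from collections import deque


class SlidingWindowAccuracy:
    """Accuracy over the most recent `window` labeled predictions only."""

    def __init__(self, window):
        self.hits = deque(maxlen=window)   # older outcomes fall off automatically

    def update(self, prediction, label):
        self.hits.append(prediction == label)
        return self.value()

    def value(self):
        return sum(self.hits) / len(self.hits) if self.hits else float("nan")


def within_latency_budget(latencies_ms, budget_ms=50.0, quantile=0.99):
    """Nearest-rank percentile check, e.g. 'is p99 latency at or under 50 ms?'"""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(quantile * len(ordered)))
    return ordered[rank - 1] <= budget_ms
```

Because the window forgets old outcomes, a recent drop in quality shows up quickly instead of being diluted by months of good history, which is the advantage over a static benchmark.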

Conclusion: Choosing and Evolving Your AI Stack

Building with AI is less about chasing novelty and more about deliberate choices that fit the problem, the data, and the constraints. Start simple, measure honestly, and only escalate complexity when the evidence calls for it. Classical machine learning offers dependable baselines and interpretability; neural networks add flexibility where patterns are layered and subtle; deep learning scales that flexibility for challenging modalities and ambitious accuracy targets. Your stack should reflect your resources and your risk tolerance, not a trend.

A practical approach you can apply today:

– Clarify objectives: what decision will the model inform, how often, and at what cost of error.

– Audit data: identify gaps, check labeling quality, and map potential biases.

– Establish baselines: rule-based or simple models set a floor for value and a check on complexity.

– Iterate methodically: change one variable at a time and log everything for reproducibility.

– Design for operations: plan monitoring, retraining, and rollback before the first deployment.
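
The "establish baselines" and "iterate methodically" steps need very little code to support. This sketch assumes configurations are JSON-serializable dictionaries. It derives a stable run identifier from the canonical JSON of a config, reports which keys changed between two runs (so a reviewer can confirm only one variable moved), checks a candidate against a baseline score, and appends each run to a JSON Lines file. The file layout, the 12-character ID, and the function names are illustrative choices, not a standard.

```python
import hashlib
import json


def config_id(config):
    """Stable short ID: identical settings give the same ID regardless of key order."""
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def changed_keys(base, candidate):
    """Keys whose values differ between two configs; ideally exactly one per experiment."""
    keys = set(base) | set(candidate)
    return sorted(k for k in keys if base.get(k) != candidate.get(k))


def beats_baseline(candidate_score, baseline_score, min_gain=0.0):
    """Only accept added complexity if it clears the baseline by `min_gain`."""
    return candidate_score - baseline_score > min_gain


def log_run(path, config, metrics):
    """Append one run record per line so results can be rebuilt and compared later."""
    record = {"config_id": config_id(config), "config": config, "metrics": metrics}
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record
```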

If you lead a team, create a playbook that encodes these steps, with checklists for validation, documentation, and fairness review. If you are an individual contributor, cultivate habits that make your work legible to others: clear experiments, scripts that rebuild results, and notes on assumptions and failure modes. Both roles benefit from a culture that treats model behavior as a living artifact that evolves with data.

Think of the AI stack like a lighthouse built in layers of stone. The foundation of data keeps it anchored; the bricks of models shape the tower; the lantern room of deployment casts value outward; and the steady maintenance of monitoring keeps the light true through storms. With that structure, you can chart a route from idea to impact, selecting machine learning, neural networks, or deep learning with confidence—because you will know not just how they work, but where each fits in the journey.