Understanding Machine Learning–Driven Analytics in Business

Outline:

– Why ML-driven analytics matters in business

– From raw data to decision: the analytics pipeline

– Predictive modeling: methods, validation, and uncertainty

– Applications across functions: marketing, operations, finance, risk

– Execution roadmap: people, process, platforms, governance

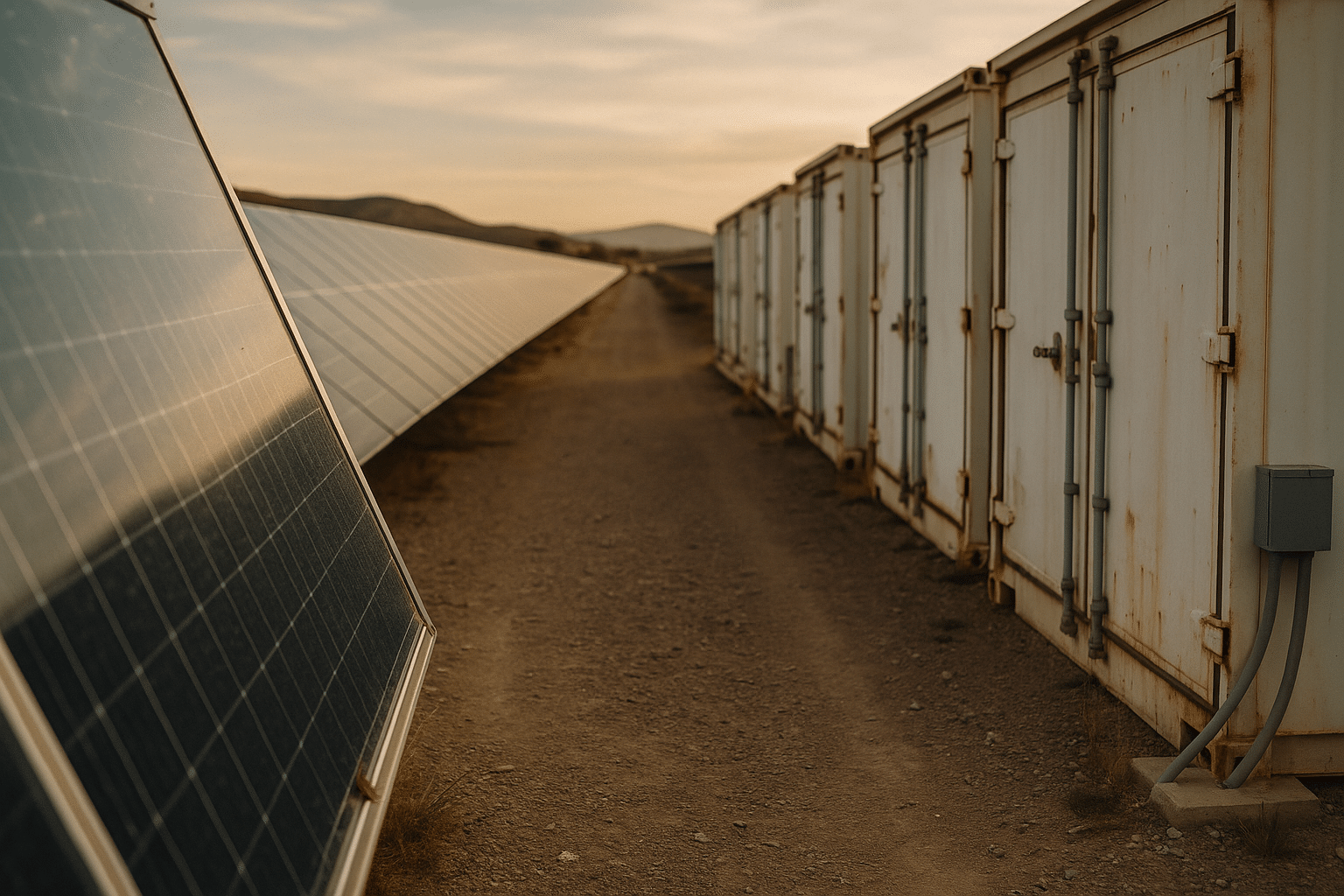

The Business Case for Machine Learning–Driven Analytics

Machine learning, data analytics, and predictive modeling form a practical trio for modern decision-making. Data analytics organizes and summarizes what has happened, predictive modeling estimates what is likely to happen, and machine learning automates pattern discovery to improve those estimates over time. Together, they convert raw observations into timely, actionable insight that helps leaders move from reactive reporting to proactive steering. When deployed with discipline, organizations typically see gains such as faster decision cycles, tighter cost control, and improved customer satisfaction. For instance, better demand forecasts often reduce stockouts by several percentage points, while precision targeting can lift campaign response rates by 10–30% relative to untargeted efforts.

What changes when ML-driven analytics enters the business rhythm is the scale and speed of pattern recognition. Instead of weekly batch reports, models can refresh hourly, pulling signals from clickstreams, sensors, or transactions to catch shifts early. That means a pricing model can react to a sudden spike in returns, a logistics model can reroute around a developing bottleneck, and a risk score can flag unusual behavior before exposure grows. Crucially, this is not about replacing judgment; it is about augmenting it. People set goals, decide trade-offs, and manage constraints, while models surface options ranked by predicted outcomes. The partnership is where value emerges.

Consider three common value levers that are repeatedly observed across sectors:

– Revenue: more relevant offers, improved conversion, and higher retention raise customer lifetime value; incremental gains in the low single digits accumulate into substantial totals across large customer bases.

– Cost: smarter routing, inventory right-sizing, and preventive maintenance commonly trim 5–15% from operating expenses in targeted domains.

– Risk: earlier warnings on fraud or credit stress reduce loss rates and protect margins; a model that separates good cases from bad more sharply can cut false positives and false negatives at the same time.

The business case strengthens further when teams track impact with controlled experiments and robust baselines. Rather than crediting models for coincidental upticks, measure incremental lift against a stable control group and monitor sustainability over quarters, not weeks. A grounded approach builds trust, avoids overclaiming, and sets a cadence for continuous improvement.
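As a minimal sketch of measuring incremental lift against a control group, the snippet below computes absolute and relative lift in conversion rate, plus a normal-approximation confidence interval on the difference. The function name and the counts are illustrative assumptions, not figures from a real campaign.

```python
import math

def incremental_lift(treated_conv, treated_n, control_conv, control_n, z=1.96):
    """Lift of treatment over control, with an approximate 95% interval
    on the absolute difference in conversion rates."""
    p_t = treated_conv / treated_n
    p_c = control_conv / control_n
    diff = p_t - p_c
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_t * (1 - p_t) / treated_n + p_c * (1 - p_c) / control_n)
    return {
        "absolute_lift": diff,
        "relative_lift": diff / p_c if p_c > 0 else float("nan"),
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }

# Hypothetical counts, for illustration only.
result = incremental_lift(treated_conv=660, treated_n=10_000,
                          control_conv=600, control_n=10_000)
```

With these made-up counts the interval spans zero, so the apparent 10% relative lift would not yet be distinguishable from noise; a longer test or larger groups would be needed before crediting the model.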

From Raw Data to Decision: The Analytics Pipeline

A reliable analytics pipeline turns scattered facts into decisions at production speed. It starts with data design: understanding which questions matter, what signals proxy those questions, and how data will be captured with adequate quality. The essential quality dimensions are straightforward yet powerful:

– Completeness: are required fields present when needed, or do gaps cascade into bias?

– Consistency: do definitions match across systems so that comparisons are meaningful?

– Timeliness: how fresh is the data relative to decision windows?

– Validity and uniqueness: are values within expected ranges and free from unintended duplicates?
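The quality dimensions above can be turned into simple rates. The sketch below scores completeness, uniqueness, validity, and timeliness for a small batch of records; consistency across systems needs a second source to compare against and is left out. The field names, the allowed range, and the 24-hour freshness window are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

def quality_report(rows, required, key, ranges, ts_field, now, max_age):
    """Share of rows that pass each basic data-quality check."""
    n = len(rows)
    complete = sum(all(r.get(f) is not None for f in required) for r in rows)
    unique_keys = len({r[key] for r in rows})
    valid = sum(
        all(r.get(f) is not None and lo <= r[f] <= hi
            for f, (lo, hi) in ranges.items())
        for r in rows
    )
    fresh = sum(now - r[ts_field] <= max_age for r in rows)
    return {
        "completeness": complete / n,
        "uniqueness": unique_keys / n,
        "validity": valid / n,
        "timeliness": fresh / n,
    }

# Hypothetical order records: one missing amount, one duplicate key,
# one negative amount that is also several days old.
now = datetime(2024, 1, 2, 12, 0)
rows = [
    {"order_id": 1, "amount": 20.0, "store": "A", "ts": datetime(2024, 1, 2, 11, 0)},
    {"order_id": 2, "amount": None, "store": "B", "ts": datetime(2024, 1, 2, 10, 0)},
    {"order_id": 2, "amount": 35.0, "store": "B", "ts": datetime(2024, 1, 2, 10, 0)},
    {"order_id": 3, "amount": -5.0, "store": "A", "ts": datetime(2023, 12, 30, 9, 0)},
]
report = quality_report(
    rows,
    required=["order_id", "amount", "store"],
    key="order_id",
    ranges={"amount": (0, 10_000)},
    ts_field="ts",
    now=now,
    max_age=timedelta(hours=24),
)
```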

Once source data lands in a governed store, cleaning and transformation resolve outliers, standardize units, and align time zones. Feature engineering then creates focused variables that models can learn from: rolling averages, day-of-week indicators, seasonality flags, counts of recent actions, lagged targets, interaction terms, and domain-specific ratios. Good features encode hypotheses about how the world works, translating human insight into numeric form. Equally important is preventing leakage, where future information sneaks into training examples. Clear cutoffs and careful windowing preserve realism.
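One way to make the leakage point concrete: build each lagged or rolling feature only from values strictly before the row being predicted. The sketch below does this for a single series; the function name, the window sizes, and the sales figures are illustrative assumptions.

```python
def lag_and_rolling_features(series, lag=1, window=3):
    """For each position t, derive features from values strictly before t."""
    features = []
    for t in range(len(series)):
        past = series[:t]  # excludes series[t], so the target cannot leak in
        lagged = past[-lag] if len(past) >= lag else None
        recent = past[-window:]
        # Only emit a rolling mean once a full window of history exists.
        rolling_mean = sum(recent) / window if len(recent) == window else None
        features.append({
            "t": t,
            "lag": lagged,
            "rolling_mean": rolling_mean,
            "target": series[t],
        })
    return features

daily_sales = [10, 12, 11, 15, 14, 18]  # hypothetical values
rows = lag_and_rolling_features(daily_sales)
```

Early rows carry `None` where not enough history exists; dropping or imputing them is a modeling choice, but filling them with later values would reintroduce the leak.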

With features defined, model development proceeds through candidate selection, cross-validation, and diagnostic checks. Teams often try a spectrum of approaches—from linear baselines to tree ensembles and sequence-aware models—while keeping a strong baseline for sanity checks. Data scientists review metrics such as accuracy, recall, precision, area under the ROC curve, calibration, and decision cost under realistic thresholds. Analysts add business context by assessing whether the model’s top signals make sense and whether the recommended actions are feasible given current processes.
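To illustrate "decision cost under realistic thresholds", the sketch below counts errors at several candidate thresholds and picks the one with the lowest total cost. The labels, scores, and the assumption that a missed positive costs five times a false alarm are hypothetical; real costs would come from the business.

```python
def confusion_counts(y_true, scores, threshold):
    """Return (tp, fp, fn, tn) when scores >= threshold are flagged positive."""
    tp = fp = fn = tn = 0
    for y, s in zip(y_true, scores):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def cheapest_threshold(y_true, scores, thresholds, cost_fp, cost_fn):
    """Pick the threshold with the lowest total misclassification cost."""
    best = None
    for t in thresholds:
        _, fp, fn, _ = confusion_counts(y_true, scores, t)
        cost = fp * cost_fp + fn * cost_fn
        if best is None or cost < best[1]:
            best = (t, cost)
    return best

# Hypothetical labels and model scores.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
best = cheapest_threshold(y_true, scores, [0.2, 0.5, 0.75], cost_fp=1, cost_fn=5)
```

Because misses are priced higher than false alarms in this example, the lowest threshold wins even though it flags more cases; a different cost ratio could reverse that choice.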

Deployment is not the finish line; it is the start of a feedback loop. Models need monitoring for drift in input distributions, performance decay, and data pipeline failures. Practical monitoring dashboards track:

– Stability: how far inputs deviate from training norms.

– Performance: live metrics against outcomes as they arrive.

– Freshness: latency from data arrival to decision.

– Coverage: share of cases where the model can provide a confident recommendation.
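For the stability check, one commonly used measure is the population stability index (PSI), which compares how a feature's values fall into bins at training time versus in production. The sketch below is one possible implementation; the bin edges and sample values are assumptions, and alert thresholds such as 0.25 are a widely quoted rule of thumb rather than a standard.

```python
import math

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between two samples, using shared bin edges (sorted ascending)."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # number of edges at or below v
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values at training time and in production.
training = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
live_shifted = [5, 6, 7, 8, 9, 10, 11, 12, 13, 14]
edges = [4, 7]

psi_same = population_stability_index(training, training, edges)
psi_shifted = population_stability_index(training, live_shifted, edges)
```

Identical samples give a PSI of zero, while the shifted sample scores far higher because an entire bin has emptied out.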

A resilient pipeline documents assumptions, versions datasets and models, captures lineage from raw source to decision, and includes a rollback plan. This discipline reduces firefighting, shortens audit cycles, and creates a reproducible path for future improvements.

Predictive Modeling: Methods, Validation, and Uncertainty

Predictive modeling is the craft of turning data into estimates and ranking decisions by expected utility. Method choice should follow problem structure and constraints. For tabular business data with mixed numeric and categorical fields, linear models provide interpretability and fast training, while tree-based ensembles often capture nonlinear interactions with strong accuracy. Time-dependent problems benefit from approaches that respect ordering: rolling windows, autoregressive terms, or sequence models that learn temporal patterns. For highly imbalanced outcomes such as rare fraud or equipment failures, class weighting, focal loss, or careful sampling strategies keep models from defaulting to the majority class.
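As one example of class weighting, the sketch below assigns each class a weight inversely proportional to its frequency, so that rare positives count as much in aggregate as the common negatives. The inverse-frequency formula is one common heuristic among several, and the 95/5 label split is hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * c) for cls, c in counts.items()}

# Hypothetical labels: 95 legitimate transactions, 5 fraudulent ones.
labels = [0] * 95 + [1] * 5
weights = inverse_frequency_weights(labels)
```

Here each fraud case is weighted 10.0 and each legitimate case about 0.53, so both classes contribute equally to a weighted loss.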

Validating models is about more than a single headline metric. A robust protocol includes:

– Cross-validation that respects time (no shuffling across future and past) for forecasting tasks.

– Separate validation and test sets to avoid incremental overfitting during iteration.

– Calibration checks to ensure predicted probabilities match observed frequencies.

– Cost-sensitive evaluation that reflects business trade-offs, not just statistical neatness.

– Stress tests to probe performance under distribution shift, such as sudden demand dips or policy changes.
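Two items from this protocol lend themselves to a short sketch: splits that never train on the future, and a binned calibration check. Both functions below are simplified illustrations with assumed names and toy data; libraries offer more complete versions.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Return (train_idx, test_idx) pairs where every test index
    comes after every train index."""
    splits = []
    for i in range(n_splits):
        test_end = n_samples - (n_splits - 1 - i) * test_size
        test_start = test_end - test_size
        if test_start <= 0:
            raise ValueError("not enough samples for the requested splits")
        splits.append((list(range(test_start)),
                       list(range(test_start, test_end))))
    return splits

def calibration_table(y_true, probs, n_bins=2):
    """Per bin: (mean predicted probability, observed positive rate, count)."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    table = []
    for b in bins:
        if b:
            mean_pred = sum(p for p, _ in b) / len(b)
            observed = sum(y for _, y in b) / len(b)
            table.append((round(mean_pred, 3), round(observed, 3), len(b)))
    return table

splits = expanding_window_splits(n_samples=10, n_splits=3, test_size=2)

# Hypothetical predicted probabilities and outcomes.
probs = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
y_true = [0, 0, 0, 1, 1, 0, 1, 1]
table = calibration_table(y_true, probs)
```

In this toy data the mean prediction matches the observed rate in each bin, which is what a well-calibrated model looks like; a gap between the two columns would signal over- or under-confidence.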

Interpretability closes the loop between math and management. Feature importance summaries reveal which signals drive predictions, partial dependence and what-if analysis illustrate how changes in inputs alter the forecast, and simple surrogate models can approximate complex models for explanation purposes. Clear explanations make it easier to socialize model behavior with frontline teams and compliance reviewers, and they help spot spurious correlations before they do damage. Transparency also supports fairness reviews, where teams check for disparate error rates across relevant segments and adjust thresholds or features to mitigate unintended bias.

Uncertainty is an asset when treated explicitly. Confidence intervals, prediction intervals, and ensemble variance offer bounds that guide decision thresholds. For example, a maintenance schedule can prioritize assets with both high failure probability and tight intervals, while deferring assets with wide uncertainty until more data arrives. Scenario analysis magnifies this value: simulate low, medium, and high demand cases, then select actions that remain effective across a range of plausible futures. By presenting point estimates alongside uncertainty, organizations avoid false precision and make choices aligned with their risk appetite.
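The maintenance example can be sketched with ensemble spread standing in for uncertainty: act where the predicted failure probability is high and the ensemble members agree, defer where they disagree. The asset names, the per-model predictions, and both thresholds are hypothetical assumptions.

```python
import statistics

def triage(assets, prob_threshold=0.6, spread_threshold=0.1):
    """Label each asset from the mean and spread of ensemble predictions."""
    decisions = {}
    for name, preds in assets.items():
        mean = statistics.mean(preds)
        spread = statistics.stdev(preds)  # disagreement across ensemble members
        if spread > spread_threshold:
            decisions[name] = "defer"      # too uncertain; gather more data
        elif mean >= prob_threshold:
            decisions[name] = "act"        # high risk and members agree
        else:
            decisions[name] = "monitor"    # low risk and members agree
    return decisions

# Hypothetical failure probabilities from four ensemble members per asset.
assets = {
    "pump_a": [0.78, 0.80, 0.82, 0.80],
    "pump_b": [0.30, 0.90, 0.60, 0.80],
    "pump_c": [0.10, 0.12, 0.11, 0.09],
}
decisions = triage(assets)
```

Ensemble spread is only a rough proxy for predictive uncertainty, so thresholds like these would need tuning against the organization's risk appetite.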

Practical Business Applications and Measured Impact

The power of machine learning and predictive modeling becomes tangible in day-to-day decisions. In marketing, uplift modeling identifies customers likely to respond because of the offer, rather than those who would have responded anyway, improving return on spend by focusing on truly persuadable audiences. Next-best-action engines sequence messages and channels to reduce fatigue and increase relevance, often driving 2–5% incremental conversion and 3–8% improvements in retention when paired with thoughtful experimentation. In sales, lead scoring ranks opportunities by conversion probability and expected value so teams prioritize outreach where it matters most.

Operations present rich opportunities as well. Demand forecasting at the product-location level helps align inventory with purchasing patterns, easing stockouts while curbing overstock. Real-world programs commonly cut working capital tied up in excess inventory by mid-single-digit percentages. Predictive maintenance monitors vibration, temperature, and usage cycles to flag components trending toward failure; targeted interventions can reduce unplanned downtime by 10–30% compared with run-to-failure approaches. Route optimization shifts deliveries to efficient paths that respect time windows and vehicle capacity, trimming fuel usage and overtime costs.

In finance and risk, early-warning indicators surface accounts likely to miss payments, enabling tailored outreach that lowers loss severity without harming customer experience. Anomaly detection highlights unusual transactions for review, balancing catch rate with reviewer workload. Pricing analytics considers willingness to pay, elasticity, and competitive conditions to suggest brackets that lift margin while maintaining volume. Workforce planning uses attrition predictions and hiring lead times to balance staffing, reducing vacancy gaps and training bottlenecks.

To make impact durable, successful teams pair models with process redesign. That means creating clear playbooks for actions triggered by scores, ensuring frontlines have authority to act, and aligning incentives with the new decision logic. It also means running controlled tests:

– Define primary and guardrail metrics before launch.

– Hold out a stable control group for a fair comparison.

– Run tests long enough to cover seasonality and learning effects.

– Track spillovers so wins in one area do not mask losses elsewhere.

By tying model outputs to operational levers and measuring incremental lift over time, organizations build a compounding advantage rather than a one-off dashboard glow-up.

Execution Roadmap: People, Process, Platforms, and Governance

A practical roadmap starts with outcomes and works backward. Clarify the business question, identify decisions that will change if better information arrives, and set target metrics with a realistic baseline. With that in place, staff the initiative with complementary roles:

– Data engineers to build reliable pipelines and manage storage.

– Analysts to frame questions, craft features, and translate results.

– Data scientists to design models and validation.

– ML engineers to deploy, monitor, and scale.

– Product leaders to connect solutions with processes and user needs.

Process discipline reduces rework. A typical lifecycle includes business scoping, data discovery, feature engineering, model development, validation, deployment, and monitoring, each with entry and exit criteria. Version everything—data snapshots, code, models, and configuration—so that results are reproducible and rollbacks are painless. Establish model registries and promotion gates to ensure only validated artifacts reach production. Automate as much as feasible: scheduled training jobs, reproducible notebooks converted to pipelines, and policy-driven access controls that protect sensitive fields.
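A lightweight way to picture versioning and promotion gates: fingerprint the configuration and data snapshot with a content hash, store those hashes next to the validation metrics, and promote only when every metric clears its floor. The record layout, metric names, and thresholds below are assumptions for illustration, not the schema of any particular model registry.

```python
import hashlib
import json

def fingerprint(obj):
    """Stable SHA-256 hash of a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def passes_promotion_gate(metrics, minimums):
    """True only if every required metric is present and meets its floor."""
    return all(metrics.get(name, float("-inf")) >= floor
               for name, floor in minimums.items())

# Hypothetical training configuration, data snapshot, and validation metrics.
config = {"model": "gradient_boosting", "max_depth": 4}
snapshot = [{"id": 1, "x": 0.5}, {"id": 2, "x": 1.5}]
record = {
    "config_hash": fingerprint(config),
    "data_hash": fingerprint(snapshot),
    "metrics": {"auc": 0.81, "recall": 0.64},
}
approved = passes_promotion_gate(record["metrics"], {"auc": 0.75, "recall": 0.60})
```

Because the hash depends only on content, rerunning with the same inputs reproduces the same identifiers, while any change to data or configuration yields a new one.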

Governance safeguards value and reputation. Document intended use, assumptions, known limitations, and expected monitoring thresholds. Set review cadences for performance, drift, and fairness, and require sign-off from relevant stakeholders before widening exposure. Privacy-by-design practices minimize the use of personally identifiable information, favor aggregated signals when possible, and retain data only as long as necessary. Security reviews cover data ingress and egress, key management, and audit trails. Clear incident playbooks define how to pause a model, revert to a baseline rule set, and communicate with affected teams.

Measuring return on investment keeps momentum. Attribute impact with controlled experiments, or with difference-in-differences analyses where randomized trials are impractical. Track both direct and second-order effects: revenue, cost, and risk outcomes plus cycle time, employee satisfaction, and model operating costs. Early efforts may show modest gains (think 1–3 percentage points of margin improvement or a few percentage points of cost reduction), but the compounding effect across multiple decisions and quarters creates meaningful enterprise value. Keep a backlog of incremental experiments, retire low-yield models, and reinvest in those that consistently demonstrate lift.
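The difference-in-differences idea reduces to simple arithmetic: subtract the change seen in a comparison group from the change seen in the group that received the model. The sketch below uses made-up weekly figures and assumes both groups would have followed parallel trends without the intervention, which is the key condition the method relies on.

```python
def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the control group."""
    def mean(xs):
        return sum(xs) / len(xs)

    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change

# Hypothetical weekly sales per store, before and after a model rollout.
effect = diff_in_diff(
    treated_before=[100, 102, 98],
    treated_after=[110, 112, 108],
    control_before=[90, 92, 88],
    control_after=[94, 96, 92],
)
```

In this example the treated stores rose by 10 and the control stores by 4, so the estimated effect attributable to the rollout is 6 units per store per week rather than the raw 10.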

Finally, cultivate literacy across the organization. Short workshops, simple glossaries, and clear internal case studies turn models from black boxes into shared tools. When teams understand what the model can and cannot do, adoption rises, feedback improves, and the flywheel turns faster.