Understanding White Label AI SaaS for Businesses

Orientation and Outline: White Label AI SaaS in Context

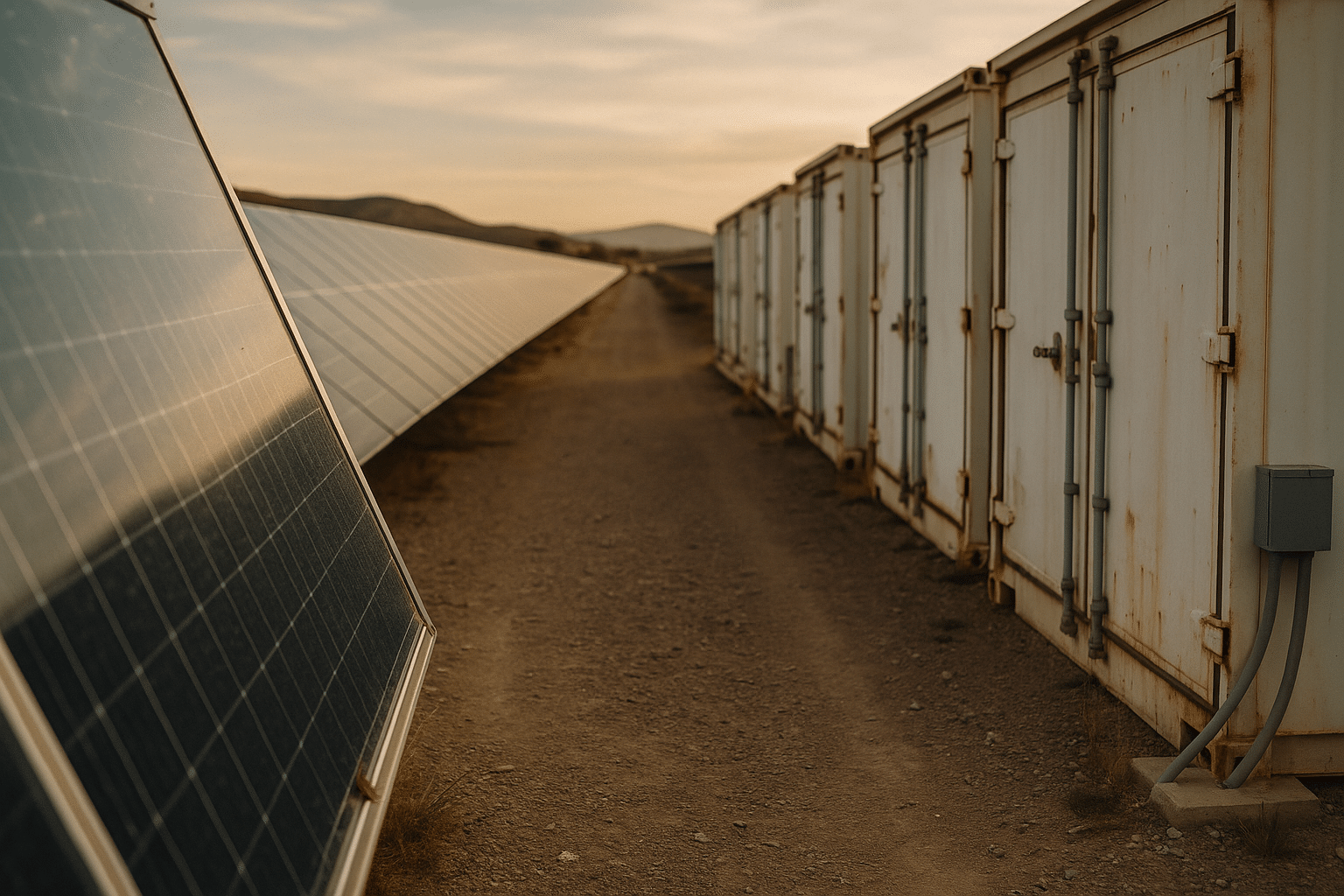

White label AI software-as-a-service allows a business to ship AI-powered capabilities—like smart search, recommendations, or support assistants—under its own brand while a specialized provider supplies the underlying platform. This division of labor matters because building secure, scalable AI from scratch is slow and capital intensive, yet modern markets reward speed, reliability, and polish. A white label approach connects those dots: you focus on market fit and customer experience; the platform supplies model hosting, data pipelines, and operational tooling. To keep this guide practical, we will begin with a clear outline and then expand each part with examples, comparisons, and actionable insights.

Outline of this article:

– The automation opportunity: where AI can reliably take over repetitive tasks, reduce cycle time, and improve consistency without compromising oversight.

– The scalability model: how multi-tenant architectures, elastic compute, and smart caching deliver predictable performance as usage grows.

– The customization toolkit: from theming and feature toggles to workflow builders, prompts, and fine-tuning options.

– Integration, data, and governance: connecting systems, protecting privacy, and aligning with compliance expectations.

– Metrics, ROI, and roadmap: measuring value, prioritizing features, and planning phased rollouts.

Three ideas anchor the whole journey. First, automation is a means, not an end: it should eliminate toil but preserve human judgment for exceptions and strategy. Second, scalability is both technical and economic: a service that handles peak load yet ruins margins is not truly scalable. Third, customization must be safe and sustainable: the more knobs you expose, the more you need guardrails to prevent complexity from spiraling. Think of your white label AI stack as a city: automation is the transit system that keeps everything moving, scalability is the power grid that expands with demand, and customization is the zoning plan that lets neighborhoods develop character without chaos.

We will reference practical benchmarks, such as typical latency targets for AI inference and cost-to-serve considerations, and we will distinguish near-term wins from longer-term investments. Wherever possible, we will surface trade-offs to help you choose confidently: when to automate end-to-end versus human-in-the-loop; when to shard services; when to configure versus customize. With the map in view, let’s start where most teams see immediate gains: automation.

Automation: Turning Repetition into Reliable Throughput

Automation in white label AI SaaS spans data collection, model operations, content generation, and support workflows. The goal is consistent output at lower variance, not unchecked autonomy. For customer-facing features, a common pattern is human-in-the-loop review for high-impact actions (e.g., billing changes), and full automation for low-risk tasks (e.g., tagging, deduplication, or routing). Behind the scenes, orchestration automates data ingestion, model selection, and inference at scale, so your product team can deliver new capabilities without micromanaging pipelines.

Typical candidates for automation include:

– Data normalization and enrichment: standardizing fields, detecting anomalies, and augmenting records with derived attributes.

– Content drafting: first-pass emails, product descriptions, summaries, or knowledge snippets that humans refine before publishing.

– Support triage and self-service: classifying tickets, suggesting answers, and escalating edge cases with context attached.

– Risk checks: pattern-based flags on transactions or sign-ups that trigger secondary review rather than outright blocks.

Compared to manual processes, standardizing steps into a pipeline typically shortens cycle times and reduces handoffs. For example, automating support triage can shrink first-response times from hours to minutes, especially when common intents are recognized early and routed correctly. Documentation creation benefits too: AI can assemble structured drafts from existing assets, letting subject matter experts concentrate on accuracy and tone rather than repetitive formatting.

Key design choices define the quality of automation. Confidence thresholds and fallback behavior determine when the system proceeds, seeks clarification, or defers to a human. Logging and replay tools help teams inspect decisions, reproduce issues, and iterate quickly. Batch versus streaming ingestion affects freshness: real-time enrichment enables faster experiences but may cost more. And prompt management or policy templates reduce drift by making updates systematic rather than ad hoc.
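As a minimal sketch of the threshold-and-fallback idea above, the function below maps a confidence score to one of three next steps. The names (`Action`, `Thresholds`, `decide`) and the cutoff values are illustrative assumptions, not part of any particular platform; real thresholds should be tuned against labeled samples for each task.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    CLARIFY = "clarify"
    DEFER_TO_HUMAN = "defer_to_human"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cutoffs chosen for illustration only.
    proceed_at: float = 0.90
    clarify_at: float = 0.60


def decide(confidence: float, high_impact: bool,
           t: Thresholds = Thresholds()) -> Action:
    """Map a model confidence score to the next step in the workflow."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # High-impact actions (e.g., billing changes) always go to a human.
    if high_impact:
        return Action.DEFER_TO_HUMAN
    if confidence >= t.proceed_at:
        return Action.PROCEED
    if confidence >= t.clarify_at:
        return Action.CLARIFY
    # Low confidence: fall back to human review.
    return Action.DEFER_TO_HUMAN
```

Keeping the thresholds in one small object makes it easier to log which values were in force for a given decision and to replay that decision later.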

Measuring impact turns automation from a guess into a disciplined practice. Track cycle time per task, first-pass accuracy, rework rate, and assisted-versus-fully-automated ratios. If you are automating drafting, look at edit distance from first draft to final publish, and time saved per document. If you are automating support, track deflection rate, average handle time, and customer satisfaction after automated responses. Start with narrow, well-scoped flows, instrument them thoroughly, and scale the patterns that show durable gains. With that foundation, you are ready to think about how those automated tasks perform under growth: scalability.
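Two of these metrics can be sketched in a few lines. The helper names are hypothetical, and `edit_ratio` uses the similarity ratio from Python's standard `difflib` as a stand-in for a true edit distance, which is an assumption made to keep the example dependency-free.

```python
from difflib import SequenceMatcher


def edit_ratio(draft: str, final: str) -> float:
    """Approximate share of text changed between draft and final.

    0.0 means the draft was published unchanged; 1.0 means nothing
    in the draft survived. This is a similarity-based proxy, not a
    strict Levenshtein distance.
    """
    return 1.0 - SequenceMatcher(None, draft, final).ratio()


def deflection_rate(resolved_by_automation: int, total_tickets: int) -> float:
    """Fraction of tickets resolved without reaching a human agent."""
    if total_tickets == 0:
        return 0.0
    return resolved_by_automation / total_tickets
```

Tracked over time, a falling edit ratio suggests first drafts are getting closer to what reviewers actually publish.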

Scalability: Elastic Foundations for Growth Without Friction

Scalability ensures your white label AI features respond consistently as user counts and workloads rise. In practice, this means designing for both horizontal elasticity and predictable tail latencies. For AI-centric workloads, the heaviest operations often involve model inference and vector search, so capacity planning should account for concurrency spikes, cold starts, and cache effectiveness. A multi-tenant architecture separates shared control planes from isolated data planes, enabling efficient operations while keeping customer data segmented.

Core levers for scale include:

– Concurrency and autoscaling: scale workers by queue depth or request rate, with warm pools to avoid cold-start penalties.

– Caching and reuse: where it is safe to do so, store embeddings, partial results, or responses to recurring prompts to avoid repeated computation.

– Queueing and backpressure: smooth bursty traffic, prioritize critical paths, and provide graceful degradation during peaks.

– Observability: track 95th and 99th percentile latency, error budgets, cache hit ratio, and cost per request by tenant.
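Two of the levers above lend themselves to a short sketch: sizing a worker pool from queue depth while keeping a warm minimum, and computing tail latency with the nearest-rank method. The function names and parameters are assumptions for illustration; a managed autoscaler or metrics backend would normally do this work.

```python
import math


def desired_workers(queue_depth: int, per_worker_capacity: int,
                    warm_pool: int, max_workers: int) -> int:
    """Scale workers with queue depth, bounded by a warm pool and a ceiling."""
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    needed = math.ceil(queue_depth / per_worker_capacity)
    # Never drop below the warm pool (avoids cold starts) or exceed the cap.
    return max(warm_pool, min(needed, max_workers))


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]
```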

Reliability under load is as much about trade-offs as raw horsepower. For instance, you might accept slightly stale recommendations during a traffic surge if it means preserving low latency and consistent user experience. Rate limiting can be tenant-aware, ensuring that high-volume customers receive guaranteed throughput while preventing noisy-neighbor effects. Likewise, read-heavy paths benefit from replication or precomputation, while write-heavy paths may require idempotent APIs and conflict resolution strategies.
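One common way to make rate limiting tenant-aware is a token bucket per tenant. The sketch below assumes hypothetical class names and per-tenant limits, keeps state in memory, and takes the current time as an argument so it can be tested deterministically; a production limiter would typically read a monotonic clock and keep its state in a shared store.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    rate_per_sec: float          # refill rate
    capacity: float              # maximum burst size
    updated_at: float = 0.0      # timestamp of the last refill
    tokens: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start full

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then try to spend one token."""
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_sec)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class TenantRateLimiter:
    """One bucket per tenant, so a noisy tenant only drains its own budget."""

    def __init__(self, limits: dict[str, tuple[float, float]],
                 default: tuple[float, float]) -> None:
        self._limits = limits      # tenant_id -> (rate_per_sec, capacity)
        self._default = default    # applied to tenants without an entry
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str, now: float) -> bool:
        bucket = self._buckets.get(tenant_id)
        if bucket is None:
            rate, capacity = self._limits.get(tenant_id, self._default)
            bucket = TokenBucket(rate, capacity, updated_at=now)
            self._buckets[tenant_id] = bucket
        return bucket.allow(now)
```

Giving a high-volume tenant a larger rate and capacity is how guaranteed throughput can be expressed in this model, while the default protects everyone else.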

Cost-aware scaling is essential. A feature that delivers great performance but consumes disproportionate resources will strain margins, especially in markets with price-sensitive buyers. Track cost-to-serve at a granular level: compute, storage, egress, and third-party API usage per feature and per tenant. When targets are clear, optimization becomes purposeful: compress embeddings within acceptable accuracy bounds, segment models by task complexity, and push infrequent jobs to off-peak windows. Many teams find that a layered approach—fast path for common requests, slower path for complex cases—balances experience and economics.
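Granular cost tracking can start as a simple aggregation. The sketch below assumes usage records arrive as dictionaries with the hypothetical keys shown; the field names and units are illustrative rather than taken from any billing system.

```python
from collections import defaultdict


def cost_per_request(records: list[dict]) -> dict[tuple[str, str], float]:
    """Average cost per request, keyed by (tenant, feature).

    Each record is assumed to carry: tenant, feature, requests, and the
    cost components compute, storage, egress, and third_party.
    """
    totals: dict[tuple[str, str], float] = defaultdict(float)
    requests: dict[tuple[str, str], int] = defaultdict(int)
    for r in records:
        key = (r["tenant"], r["feature"])
        totals[key] += r["compute"] + r["storage"] + r["egress"] + r["third_party"]
        requests[key] += r["requests"]
    # Skip keys with zero requests to avoid dividing by zero.
    return {k: totals[k] / requests[k] for k in totals if requests[k] > 0}
```

Comparing these figures against per-tenant revenue is one way to spot features that perform well but strain margins.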

Finally, design for operational resilience. Incident runbooks, synthetic checks, and canary rollouts limit blast radius. Observability should connect symptoms to causes quickly: a latency spike in inference should be traceable to a model version change, a cache invalidation, or an upstream ingestion problem. Document service-level objectives (e.g., 99.9% monthly availability for core endpoints, which allows roughly 43 minutes of downtime in a 30-day month) and align them with customer-facing commitments. When scale becomes methodical rather than heroic, your customization efforts can flourish without jeopardizing performance.

Customization: Tailoring the Experience Without Losing Maintainability

Customization is where white label AI SaaS proves its value: you deliver a product that looks, speaks, and behaves as if it were built in-house. The challenge is to allow meaningful shaping—branding, workflows, prompts, and policies—without creating a brittle system that is hard to upgrade. A sensible approach is to think in layers of flexibility, exposing configuration first, composition second, and code-level extension last. This gives customers room to differentiate while keeping core services stable and supportable.

Common customization layers include:

– Branding and theming: colors, typography, logos, and layout presets that respect accessibility and responsive design.

– Feature flags and plans: enable or disable modules by tier, role, or tenant to align value with pricing.

– Workflow builders: visual steps, rules, and triggers to adapt processes like onboarding, approvals, or support escalations.

– Prompt and policy templates: predefined patterns for tone, guardrails, and compliance that admins can refine safely.

– Data and model choices: pick from baseline models for routine tasks or higher-capacity options for complex reasoning when justified by use cases.

Two safeguards keep customization productive. First, schema-driven configurations ensure that every option has validation, sensible defaults, and documentation. This reduces misconfiguration risk and speeds updates. Second, a permission model separates who can view, change, or publish settings, with audit trails for accountability. For AI features, content safety policies and output filters should be adjustable within controlled bounds, allowing industry-specific terms while preventing disallowed categories.
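A schema-driven configuration can be sketched with a dataclass that carries the defaults and a loader that validates overrides. The option names, allowed values, and limits below are hypothetical and chosen only to show the pattern.

```python
import re
from dataclasses import dataclass, fields

ALLOWED_TONES = {"neutral", "friendly", "formal"}  # hypothetical option set


@dataclass(frozen=True)
class TenantConfig:
    """Every option has a default, so a tenant overrides only what it needs."""
    brand_color: str = "#1A73E8"
    tone: str = "neutral"
    max_response_tokens: int = 512


def load_config(raw: dict) -> TenantConfig:
    """Validate tenant overrides and merge them with the defaults."""
    known = {f.name for f in fields(TenantConfig)}
    unknown = set(raw) - known
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    cfg = TenantConfig(**raw)
    if not (isinstance(cfg.brand_color, str)
            and re.fullmatch(r"#[0-9A-Fa-f]{6}", cfg.brand_color)):
        raise ValueError("brand_color must be a hex color such as #1A73E8")
    if cfg.tone not in ALLOWED_TONES:
        raise ValueError(f"tone must be one of {sorted(ALLOWED_TONES)}")
    if not (isinstance(cfg.max_response_tokens, int)
            and 1 <= cfg.max_response_tokens <= 4096):
        raise ValueError("max_response_tokens must be an integer from 1 to 4096")
    return cfg
```

Rejecting unknown keys outright is a deliberate choice here: a typo in an option name fails loudly instead of silently falling back to a default.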

From a product perspective, it’s helpful to classify requests as configure, compose, or extend. Configure covers sliders, dropdowns, and toggles that are safe to change frequently. Compose assembles building blocks—like “ingest → classify → route → notify”—into end-to-end flows. Extend is reserved for high-value cases where custom code or plugins are warranted; these should go through review, testing, and versioning. By funneling most needs into configure and compose, you keep upgrades simple and enable a steady drumbeat of improvements without breaking tenant setups.
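The compose tier can be illustrated with small steps chained into one flow. The step implementations below (keyword matching, queue names) are placeholders invented for the example; in a real product each step would call a platform service.

```python
from functools import reduce
from typing import Callable

Step = Callable[[dict], dict]


def compose(*steps: Step) -> Step:
    """Chain steps left to right into a single callable flow."""
    def run(item: dict) -> dict:
        return reduce(lambda acc, step: step(acc), steps, item)
    return run


def ingest(item: dict) -> dict:
    # Normalize the raw text before classification.
    return {**item, "text": item["raw"].strip().lower()}


def classify(item: dict) -> dict:
    # Placeholder rule standing in for a model call.
    label = "billing" if "invoice" in item["text"] else "general"
    return {**item, "label": label}


def route(item: dict) -> dict:
    # Hypothetical queue names.
    queue = {"billing": "finance-queue"}.get(item["label"], "support-queue")
    return {**item, "queue": queue}


def notify(item: dict) -> dict:
    # A real step would send a message; here we only record the intent.
    return {**item, "notified": True}


triage_flow = compose(ingest, classify, route, notify)
```

Because each step takes and returns a plain dictionary, tenants can reorder or swap blocks without touching the code inside them.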

Measure the impact of customization on outcomes, not just aesthetics. Track activation time (how long to reach first meaningful value), feature adoption by role, and support tickets related to configuration. If tenants with tailored workflows show higher retention or faster cycle times, that’s a signal to invest further in those controls. The right toolkit feels like a well-organized workshop: every tool has a place, guardrails keep novices safe, and experts can still craft something remarkable.

Integration, Governance, ROI—and a Practical Conclusion

Integration ties your white label AI features into the fabric of daily work. Start with the systems that hold truth—customer records, product catalogs, knowledge bases—and define reliable sync paths. Webhooks and event buses create responsive loops where AI outputs can trigger follow-up actions in other apps. Idempotent APIs and clear versioning simplify retries and upgrades. For data flows, document schemas and retention policies so that ingestion, enrichment, and deletion remain consistent across tenants.
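The value of idempotency for retries can be shown with a small wrapper that remembers results by key. This is a sketch with hypothetical names and an in-memory dictionary; a real service would more likely use a shared store with expiry and handle concurrent requests carrying the same key.

```python
from typing import Any, Callable


class IdempotentHandler:
    """Run the wrapped handler at most once per idempotency key."""

    def __init__(self, handler: Callable[[dict], Any]) -> None:
        self._handler = handler
        self._results: dict[str, Any] = {}

    def handle(self, idempotency_key: str, payload: dict) -> Any:
        # A retry with a known key returns the stored result
        # instead of repeating the side effect.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        result = self._handler(payload)
        self._results[idempotency_key] = result
        return result
```

With this in place, a webhook consumer that times out and retries does not create a duplicate record on the second attempt.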

Governance gives stakeholders confidence to adopt. Treat privacy and security as product features: encryption in transit and at rest, role-based access controls, and audit logs visible to admins. Maintain data isolation by tenant and be explicit about which data is used to train or improve shared models and which stays within the tenant. Human oversight should be built-in, not bolted on: reviewers need queues, sampling rules, and the ability to override or retrain. Map your controls to recognized compliance frameworks so procurement can assess readiness efficiently, and keep a living record of risk assessments and mitigations.
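A sampling rule for human review can be as simple as a deterministic hash of the item identifier. The function name and the choice of SHA-256 below are assumptions for illustration; the point is that the same item always gets the same decision, which makes audits reproducible.

```python
import hashlib


def should_review(item_id: str, sample_rate: float) -> bool:
    """Deterministically select roughly `sample_rate` of items for review."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    # Map the first four bytes to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < sample_rate
```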

To justify investment, quantify value with a simple model. Consider a baseline where a team spends N hours per week on a task at an average loaded hourly rate. After automation, direct effort drops by a fraction f of those hours, and better quality removes rework equal to a further fraction r of the baseline. Annual benefit is then approximately N × (f + r) × weeks × rate, minus platform fees and incremental compute. Add customer experience gains measured through faster response times, higher conversion on recommendations, or increased self-service resolution. Focus on a handful of metrics that reflect business outcomes, such as cost-to-serve, time-to-resolution, and retention uplift.
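The model above translates directly into a small function. The parameter names are illustrative, and the figures in the usage note are made-up inputs rather than benchmarks.

```python
def annual_net_benefit(hours_per_week: float, f: float, r: float,
                       weeks: float, rate: float,
                       platform_fees: float, incremental_compute: float) -> float:
    """Approximate annual net benefit of automating a task.

    f: fraction of baseline hours removed by automation
    r: further fraction of baseline hours saved through reduced rework
    """
    if not (0.0 <= f <= 1.0 and 0.0 <= r <= 1.0):
        raise ValueError("f and r must each be between 0 and 1")
    gross = hours_per_week * (f + r) * weeks * rate
    return gross - platform_fees - incremental_compute
```

For example, with 40 hours per week, f = 0.5, r = 0.25, 50 working weeks, and a loaded rate of 60 per hour, gross savings come to 90,000; subtracting 20,000 in platform fees and 5,000 in compute leaves a net benefit of 65,000.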

Adopt in phases to balance ambition and risk:

– Phase 1: pilot one or two automations in low-risk domains with robust measurement.

– Phase 2: expand to customer-facing features once reliability and oversight patterns are proven.

– Phase 3: deepen customization, standardize governance, and optimize cost-to-serve as usage scales.

– Phase 4: iterate on the roadmap using evidence from metrics, support feedback, and sales conversations.

Conclusion for business leaders: White label AI SaaS is a pragmatic path to intelligent products that look and feel like your own, without carrying the full weight of platform engineering. Use automation to reclaim time and consistency, design for scalability so growth is graceful and affordable, and invest in customization that lets your market see itself in your product. With careful integration, clear guardrails, and honest measurement, you can move faster, reduce risk, and create enduring value for customers and teams alike.