Understanding White Label AI SaaS Solutions for Businesses

Outline and Strategic Overview

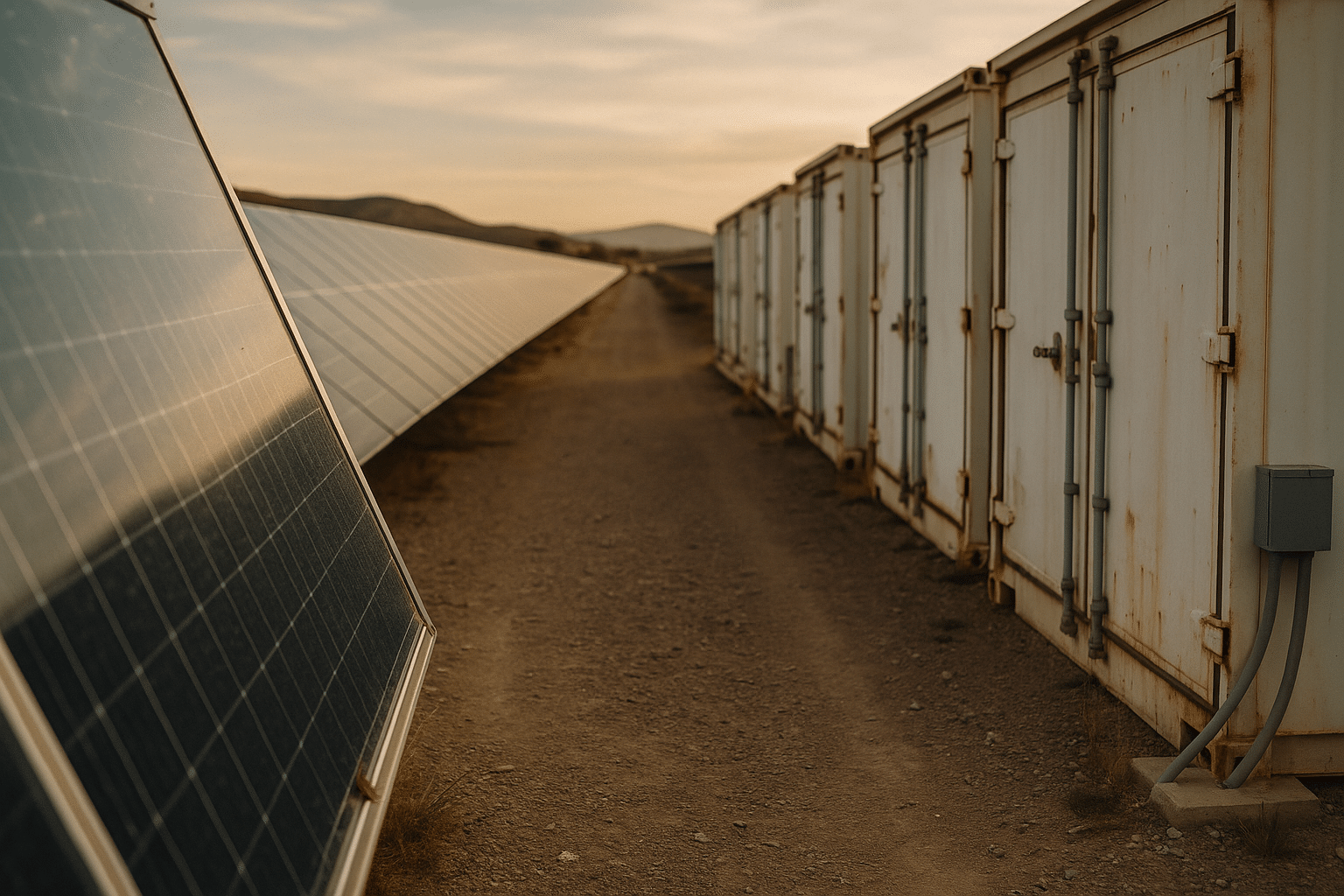

White label AI SaaS lets organizations deliver intelligent features under their own banner without owning every layer of the stack. Think of it as a sturdy bridge: the provider engineers the foundations—models, APIs, security, uptime—while your team crafts the surface experience—workflows, policy rules, and the visual layer customers see. This approach compresses time to market, shifts upfront capital to predictable operating costs, and gives access to continuously improving AI capabilities. It also introduces new responsibilities around data governance, model oversight, and lifecycle management, which this article unpacks in detail.

Here’s the roadmap we will follow to connect strategy with practice and make trade‑offs explicit rather than accidental:

– Automation: how to transfer repetitive tasks from people to systems without losing judgment or accountability

– Customization: how to align the solution with domain rules, brand voice, and regulatory constraints

– Scalability: how to grow from a pilot to global usage with stable performance and cost control

– Implementation playbook and ROI: how decision‑makers de‑risk adoption, measure outcomes, and plan governance

Why this matters now: AI workloads have moved from experiments to production use. Teams that rely only on custom builds often face long lead times, uneven quality, and maintenance toil. Teams that lean entirely on off‑the‑shelf tools can ship quickly but may hit walls on differentiation and compliance. White label AI SaaS sits between those poles. You purchase a robust, multi‑tenant platform with APIs, orchestration, and monitoring, then configure it deeply to match your environment. The result is a portfolio of intelligent capabilities—summarization, recommendations, classification, extraction, conversational flows—embedded in your product or operations with your rules and identity.

This article emphasizes evidence‑oriented choices. You will see practical examples, risk checks, and metrics you can track from day one. By the end, you should be able to map your own requirements to an automation backlog, a customization plan, and a scalability envelope, and then run a pilot that is small enough to learn quickly yet realistic enough to surface true constraints.

Automation: Turning Repetition into Reliable Outcomes

Automation in a white label AI SaaS context means orchestrating models, rules, and integrations so routine work happens with minimal human intervention while preserving oversight. The target is not to replace expert judgment but to filter, enrich, and route work so people focus where impact is highest. Typical starting points include email or ticket triage, document classification and extraction, lead scoring, content moderation, and knowledge retrieval. In many teams, these flows consume hours per day; even modest accuracy gains and latency reductions can create measurable capacity.

Consider a support pipeline: messages arrive, the AI classifies intent, extracts key entities, and proposes responses drawn from a vetted knowledge base. Confidence thresholds determine whether answers go out automatically or are queued for review. Over time, feedback from agents is used to retrain or retune the system. Many organizations report time savings in the range of 20–40% for targeted tasks once confidence thresholds are tuned and exception paths are clear, though results vary with task mix and baseline process quality, so treat that range as a hypothesis to test in your own pilot. Error rates typically decline when the system enforces consistent policy checks before action.
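The threshold routing described above can be sketched in a few lines. This is a minimal illustration, not any platform's API: the `Draft` type, the queue names, and both threshold values are assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them against your own review data.
AUTO_SEND_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


@dataclass
class Draft:
    intent: str        # classified intent, e.g. "refund"
    reply: str         # proposed response text
    confidence: float  # model-reported score in [0, 1]


def route(draft: Draft) -> str:
    """Return the name of the queue a drafted reply should go to."""
    if draft.confidence >= AUTO_SEND_THRESHOLD:
        return "auto_send"
    if draft.confidence >= REVIEW_THRESHOLD:
        return "agent_review"
    # Low confidence: discard the draft and hand the message to a person.
    return "manual_handling"
```

Keeping the thresholds as named constants (or configuration) makes it easy to tighten or relax automation without touching the routing logic.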

Automation options vary by technique:

– Deterministic rules excel where policies are crisp and data is structured

– Statistical models handle classification and ranking under uncertainty

– Retrieval‑augmented generation can draft context‑aware text while grounding claims in approved sources

– Human‑in‑the‑loop gates ensure accountability for high‑risk actions

Compared with traditional scripting or robotic process automation, AI‑driven flows are more adaptable to messy inputs (free‑form text, mixed languages, ambiguous intents). The trade‑off is the need for monitoring: model drift, prompt regressions, and data coverage gaps can erode performance quietly. A white label platform helps by providing built‑in evaluation harnesses, policy filters, and analytics, so you can track precision, recall, latency, cost per transaction, and override rates. Good practice is to define “quality budgets” alongside cost budgets: for example, set a minimum precision for automated responses, measured on audited samples, and pause auto‑send whenever measured precision falls below that floor.
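One way to make a quality budget concrete is a rolling check over audited outcomes. The sketch below is an assumption about how such a gate could look, not a feature of any particular platform. The class name, window size, and minimum sample count are all hypothetical.

```python
from collections import deque
from typing import Optional


class QualityBudget:
    """Rolling precision gate for automated responses (illustrative only)."""

    def __init__(self, min_precision: float, window: int = 200, min_samples: int = 20):
        self.min_precision = min_precision
        self.min_samples = min_samples
        # Each entry is True when an audited automated response was correct.
        self.outcomes = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def precision(self) -> Optional[float]:
        # Not enough audited samples yet to say anything meaningful.
        if len(self.outcomes) < self.min_samples:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def auto_send_allowed(self) -> bool:
        p = self.precision()
        # With too few samples, stay conservative and keep humans in the loop.
        return p is not None and p >= self.min_precision
```

A caller would check `auto_send_allowed()` before each automated send and fall back to the review queue when it returns `False`.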

To get started, define a small but valuable slice: one document type, one channel, one language. Instrument the end‑to‑end path with clear metrics such as median response time, containment rate (tasks resolved without escalation), and audit pass rate (percentage of outputs meeting policy). Publish those numbers weekly. The goal is a repeatable template you can replicate across teams, not a fragile one‑off. When automation is approached as a discipline—measured, governed, and iterated—it becomes a reliable engine, not a novelty.
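The three metrics named above are simple to compute once each task is logged. The record fields below are assumptions about what your instrumentation captures; adapt them to your own event schema.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class TaskRecord:
    response_seconds: float  # time from arrival to first response
    escalated: bool          # True if the task needed a human escalation
    passed_audit: bool       # True if the output met policy on review


def weekly_metrics(records: List[TaskRecord]) -> Dict[str, float]:
    """Summarize one reporting period of task records."""
    if not records:
        raise ValueError("no records for this period")
    n = len(records)
    return {
        "median_response_seconds": median(r.response_seconds for r in records),
        # Containment: share of tasks resolved without escalation.
        "containment_rate": sum(not r.escalated for r in records) / n,
        # Audit pass rate: share of outputs that met policy.
        "audit_pass_rate": sum(r.passed_audit for r in records) / n,
    }
```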

Customization: Aligning AI with Domain, Brand, and Governance

Customization is where a white label AI SaaS becomes your solution rather than just a generic toolkit. It spans three layers: the experience customers see, the logic that encodes your policies, and the data that grounds outputs in your domain. The art is to configure deeply without forking the product into an unmaintainable variant. Most modern platforms expose no‑code and low‑code options for common patterns, plus APIs and webhooks for advanced integrations.

Think through customization dimensions explicitly:

– Data: connectors to your repositories, retrieval rules, redaction of sensitive fields, and data retention windows

– Models: choice of base model family, domain adaptation via prompt templates or lightweight fine‑tuning, and guardrails for style and tone

– Workflow: branching logic, confidence thresholds, human review steps, and escalation policies

– Access: role‑based permissions, audit trails, and regional data controls for regulatory compliance

– Localization: terminology, spelling, and formatting aligned to each market

– Branding: wording patterns, UI components, and visual identity that match your product

For many companies, the fastest wins come from prompt and retrieval design. By pulling facts only from approved sources and inserting policy disclaimers at the right moments, teams reduce hallucination risk and keep responses consistent with legal guidance. Lightweight fine‑tuning can help with style or entity definitions, but rigorous evaluation is essential to avoid overfitting. A helpful rule is to treat prompts and evaluation datasets as first‑class assets: version them, review them, and test them just like code.

Customization is also about guardrails. Establish prohibited content categories, escalation rules for risky intents, and quotas for automated actions. Map these to controls in the platform so policy is enforced by the system, not remembered by individuals. Many teams see adoption accelerate when output style mirrors their brand voice and when the system respects the same approval flows staff already use. In internal surveys, it is common to see higher trust scores when control surfaces are visible: a toggle for auto‑send, a clear explanation panel, and an easy “flag for review” action.

Finally, plan for change. New regulations, new data sources, and new product lines will arrive. Customization should minimize time‑to‑change: parameterize thresholds, keep domain terms in a central glossary, and expose configuration as versioned templates. The more you can ship updates without redeploying core infrastructure, the more flexible—and safe—your AI will be.
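As a rough sketch of configuration treated as a versioned template, the snippet below loads thresholds and glossary terms from JSON and validates them before use. The field names and the JSON layout are assumptions made for illustration, not a schema from any platform.

```python
import json
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class WorkflowConfig:
    version: str                # label for this template revision
    auto_send_threshold: float  # confidence needed to send without review
    glossary: Dict[str, str]    # central list of domain terms


def load_config(raw: str) -> WorkflowConfig:
    """Parse and validate a JSON configuration template."""
    data = json.loads(raw)
    cfg = WorkflowConfig(
        version=str(data["version"]),
        auto_send_threshold=float(data["auto_send_threshold"]),
        glossary=dict(data.get("glossary", {})),
    )
    if not 0.0 <= cfg.auto_send_threshold <= 1.0:
        raise ValueError("auto_send_threshold must be in [0, 1]")
    return cfg
```

Because the template is plain data, a threshold change becomes a reviewed, versioned edit rather than a redeployment.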

Scalability: From Pilot to Global Footprint Without Surprises

Scaling a white label AI SaaS is a blend of technical engineering and financial planning. On the technical side, you want predictable latency, throughput, and isolation as tenants grow. On the financial side, you want unit economics that remain healthy under peak usage. A practical mindset treats scalability as a series of envelopes: limits for concurrency, storage, tokens or characters processed, and cost per outcome.

Key technical practices include stateless service layers, autoscaling, and granular rate limits per tenant or per use case. Caching validated intermediate results (embeddings, retrieval snapshots, or classification outcomes) reduces latency and spend when similar queries recur. For retrieval‑heavy applications, partitioning indexes by tenant and region improves performance and residency compliance. Queueing and back‑pressure ensure bursts don’t cascade into failures, while idempotent operations prevent duplicates when retries occur. Observability is non‑negotiable: trace end‑to‑end request paths, tag logs with tenant identifiers, and track latency percentiles rather than averages.
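Per-tenant rate limiting is commonly implemented with a token bucket. The sketch below is one minimal in-memory version, with the class name and parameters chosen for illustration. A production system would typically keep this state in a shared store, which is not shown here.

```python
import time
from typing import Callable, Dict


class TenantRateLimiter:
    """In-memory token bucket per tenant (illustrative, single process)."""

    def __init__(self, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.burst = float(burst)
        self.clock = clock  # injectable so the limiter can be tested
        self._tokens: Dict[str, float] = {}
        self._last: Dict[str, float] = {}

    def allow(self, tenant_id: str) -> bool:
        now = self.clock()
        last = self._last.get(tenant_id, now)
        self._last[tenant_id] = now
        # Refill based on elapsed time, capped at the burst size.
        tokens = self._tokens.get(tenant_id, self.burst)
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._tokens[tenant_id] = tokens - 1.0
            return True
        self._tokens[tenant_id] = tokens
        return False
```

Each tenant draws from its own bucket, so one tenant's burst cannot consume another tenant's allowance.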

Reliability comes from simple, well‑tested patterns:

– Health checks and circuit breakers to isolate degraded dependencies

– SLOs for uptime, latency, and accuracy, with clear error budgets

– Blue‑green or canary releases so changes reach a small slice before global rollout

– Multi‑region failover for critical workloads, aligned with data residency rules
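To illustrate the first pattern in the list, here is a minimal circuit breaker. It is a simplified sketch under stated assumptions: the failure limit and cool-down are hypothetical, state lives in one process, and thread safety is not handled.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Stops calling a failing dependency until a cool-down has passed."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: dependency calls are paused")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on reaching the limit, or re-open if the trial call failed.
            if self.failures >= self.max_failures or self.opened_at is not None:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Wrapping each call to a model endpoint or retrieval service in `breaker.call(...)` lets callers fail fast and fall back to a degraded path while the dependency recovers.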

Cost scalability is just as important. Model‑driven workloads can fluctuate with input size, so design prompts and context windows thoughtfully. Trimming irrelevant context reduces spend and often increases accuracy. Batch operations where possible, and align pricing plans with actual value units (for example, per resolved task rather than per raw request). FinOps dashboards that show cost per workflow, cost per tenant, and cost per successful outcome help product owners make trade‑offs with data rather than intuition.
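The dashboard figures mentioned above reduce to a simple aggregation. The sketch below assumes each usage event is a dictionary with `tenant`, `workflow`, `cost`, and `success` fields. That schema is hypothetical and stands in for whatever your billing or analytics export provides.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional


def cost_per_success(events: Iterable[dict], key: str) -> Dict[str, Optional[float]]:
    """Total cost divided by successful outcomes, grouped by `key`.

    `key` is "tenant" or "workflow". Groups with no successful
    outcome map to None rather than a misleading number.
    """
    cost = defaultdict(float)
    wins = defaultdict(int)
    for e in events:
        group = e[key]
        cost[group] += e["cost"]  # failed attempts still cost money
        if e["success"]:
            wins[group] += 1
    return {g: (cost[g] / wins[g] if wins[g] else None) for g in cost}
```

Counting the cost of failed attempts in the numerator is deliberate: it shows what a resolved task really costs, not just what a request costs.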

As adoption widens, organizational scalability matters too. Clear runbooks, shared component libraries, and “golden paths” prevent each team from reinventing the same integration. Periodic load tests with realistic data expose weak links before customers find them. Finally, treat limits as design tools: publish supported volumes, file sizes, and languages, and provide graceful degradation paths. When customers know what to expect and the system behaves consistently, satisfaction tends to hold up even under heavy load.

Implementation Playbook and Conclusion for Decision‑Makers

A successful white label AI SaaS rollout starts small, grows deliberately, and keeps stakeholders aligned. Begin with a pilot that targets one or two measurable outcomes—faster response time in a support queue, higher accuracy in document extraction, or greater self‑service containment for common requests. Define a baseline for each metric, then set realistic quarterly targets. Identify a cross‑functional team with clear roles: product owner, domain expert, data steward, security lead, and operations engineer. Time‑box the pilot to 6–10 weeks so you can decide confidently whether to expand.

Run the playbook step by step:

– Discovery: inventory data sources, privacy constraints, and candidate workflows; choose the smallest slice that still exercises key risks

– Design: map prompts, retrieval rules, thresholds, and human‑in‑the‑loop gates; draft evaluation sets for accuracy and policy compliance

– Build: integrate via APIs or connectors; instrument analytics from day one; document assumptions in the runbook

– Prove: run A/B or before/after tests; collect user feedback; compare cost per outcome against the baseline

– Harden: add monitoring, alerts, and quotas; tune thresholds; document escalation and rollback procedures

To frame ROI, compare three cost blocks: platform fees, integration work, and ongoing operations. Offset these against time saved, error reduction, and revenue impacts (new upsell paths, improved conversion, or higher retention). A conservative approach treats only a portion of time saved as immediately redeployable and values accuracy gains where audits confirm fewer rework cycles. For example, if a team processes 50,000 documents per quarter and automation lifts straight‑through processing from 20% to 45%, manual handling falls from 40,000 to 27,500 documents, or 12,500 fewer manual passes per quarter. Those avoided passes and the reduced cycle time often yield a clear payback within a few quarters, assuming governance remains tight and exception handling is efficient.
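The payback arithmetic can be laid out explicitly. In the sketch below, only the document volume and the two straight-through rates come from the example above. The minutes per manual document, hourly cost, redeployable share, upfront cost, and recurring fees are hypothetical placeholders to replace with your own figures.

```python
def avoided_manual_docs(docs: int, stp_before: float, stp_after: float) -> int:
    """Documents per quarter that no longer need a manual pass."""
    return round(docs * (stp_after - stp_before))


def quarterly_savings(avoided_docs: int, minutes_per_doc: float,
                      hourly_cost: float, redeployable_share: float) -> float:
    """Value of time saved, counting only the redeployable portion."""
    hours_saved = avoided_docs * minutes_per_doc / 60
    return hours_saved * hourly_cost * redeployable_share


def payback_quarters(upfront_cost: float, savings: float,
                     recurring_cost: float) -> float:
    """Quarters needed for net savings to cover the upfront cost."""
    net = savings - recurring_cost
    if net <= 0:
        return float("inf")  # the project never pays back at these rates
    return upfront_cost / net


# From the example in the text: 50,000 documents, 20% -> 45% straight-through.
avoided = avoided_manual_docs(50_000, 0.20, 0.45)  # 12,500 documents

# Hypothetical inputs: 6 minutes per manual document, 40 per hour fully
# loaded, and only half of the saved time treated as redeployable.
savings = quarterly_savings(avoided, minutes_per_doc=6,
                            hourly_cost=40, redeployable_share=0.5)

# Hypothetical costs: 30,000 upfront integration, 10,000 per quarter in fees.
quarters = payback_quarters(30_000, savings, recurring_cost=10_000)
```

With these placeholder inputs the model gives 25,000 in quarterly savings and a two-quarter payback. The useful part is the structure, which makes each assumption visible and easy to challenge.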

As a closing perspective, think of white label AI SaaS as a well‑engineered scaffold: sturdy enough to climb quickly, flexible enough to adapt as your building changes. Automation gives you reliable lift, customization ensures the structure matches your blueprint, and scalability keeps the whole project safe as more people step onto the platform. For product leaders and operations teams, the path forward is pragmatic: start with one valuable workflow, measure relentlessly, harden the controls, and expand only when the data supports it. Do that, and you’ll grow an AI capability that feels less like a gamble and more like disciplined progress.